Distilling Kimi Delta Attention into AFM-4.5B (and the Tool We Used to Do It)

Learn how Kimi Delta Attention was distilled into AFM-4.5B using knowledge distillation, long-context training, and Arcee’s open-source DistillKit.

Running language models on CPUs has been discussed for some time, but delivering accurate results with production-level performance remains unproven. So, is using CPUs for language models truly viable in production?

Running inference with large language models involves thousands of matrix multiplications, tensor operations, and other compute- and memory-intensive tasks. The need to perform these operations in parallel has made GPUs the natural choice for hosting models. However, GPUs come with one major drawback: everyone wants them, and they’re in limited supply, which drives up the cost.

This fact has inspired companies like Meta, Microsoft, Alibaba, and Arcee AI to release small language models (SLMs), such as Llama, Phi, Qwen, and AFM, which can run efficiently on low VRAM GPUs. However, even these GPUs can have a prohibitive price tag if not managed properly, which begs the question: can we run SLMs without GPUs?

In this blog, we’ll cover how small language models can run on CPUs. Using Arcee AI’s foundation model, AFM-4.5B, we’ll run benchmarks on Intel Sapphire Rapids, AWS Graviton4, and Qualcomm Z1E-80-100 processors at various quantizations, answering common questions along the way.

Reducing cost isn’t the only advantage of CPU inference. Having the flexibility to run models on widely available hardware means it’s now possible to host models anywhere you’d like, particularly on your private infrastructure. You won’t need to worry about whether a company is storing your prompts, using your data to train their next model, or where it may be sending your confidential data. This makes it easier to meet your security, compliance, and governance requirements.

Running language models on CPUs also opens up the possibility of edge and on-device deployment of models, where GPUs are extremely impractical due to technical and economic reasons. Running models close to the end user also helps improve the user experience by reducing or even eliminating connectivity issues, solving the painful problem of “What happens if I lose my internet connection?”

First, let’s address the elephant in the room. GPUs have thousands of cores and can handle massive amounts of parallel operations. Meanwhile, server CPUs typically have 64 cores and are designed to execute tasks sequentially. So, how can running models on a CPU deliver acceptable performance?

There are three primary reasons:

Recent CPU architectures feature specialized instruction sets that accelerate low-precision matrix multiplications and other neural network operations, significantly enhancing inference throughput.

For example, Intel Xeon Sapphire Rapids and Granite Rapids processors support hardware acceleration instruction sets, such as AVX-512, Vector Neural Network Instructions (VNNI), and Advanced Matrix Extensions (AMX). VNNI helps reduce the number of instructions required for common deep learning operations, such as convolutions and dot products, by fusing multiple steps into a single instruction. AMX further accelerates matrix-heavy workloads by introducing dedicated matrix multiplication hardware and larger tile-based registers. Together, these technologies significantly increase computational throughput and efficiency, making CPUs more competitive for AI inference workloads.

Likewise, AWS Graviton4 processors, based on the Arm Neoverse V2 architecture, offer significant improvements in performance and efficiency for a wide range of workloads, including deep learning inference. Graviton4 features enhanced vector processing capabilities through the Scalable Vector Extension (SVE). These vector instructions enable more efficient execution of parallel computations, such as matrix multiplications and tensor operations, which are crucial for running language models. With higher memory bandwidth, Graviton4 makes Arm-based CPUs an increasingly viable option for cost-effective AI inference at scale.

Model serving libraries, such as llama.cpp, take advantage of modern CPU instruction sets during inference to accelerate key operations. These libraries operate under constrained compute and memory environments—especially when running on CPUs instead of GPUs—so they employ a variety of clever optimization techniques to maximize efficiency.

To reduce memory overhead, models are typically memory-mapped, a technique that allows only the necessary parts of the model to be loaded into RAM on demand rather than keeping the entire model in memory. This enables larger models to run on systems with limited RAM.

A notable recent innovation is block interleaved weight repacking, which reorganizes model weights to better align with the wider vector registers and fused instructions available in modern CPUs (such as AVX-512, VNNI, and AMX). This approach significantly improves prompt processing speeds by allowing more efficient use of the CPU’s SIMD (single instruction, multiple data) capabilities.

On the compute side, model-serving libraries use multi-threading to parallelize tensor operations across multiple CPU cores. By distributing the workload effectively—often by assigning different layers or chunks of computation to separate threads—they’re able to achieve much higher throughput and better latency, making CPU-based inference increasingly practical for real-world applications.

Of course, none of the hardware or library improvements are relevant if there isn’t a small enough model that can fit within the compute and memory constraints of a CPU and is accurate enough to be used by businesses. Fortunately, over the past few years, models have continued to improve at an impressive rate, and smaller models are now approaching the same accuracy and capability levels as larger models. AFM-4.5B is an example of this.

It’s important to note that 4.5 billion isn’t the largest model you can run on a CPU. We’ve found that even 70B models can run on a CPU with 4-bit quantization. However, the text generation speed is significantly slower than 10 tokens per second, which is too slow for comfort. For practical deployments, 8B models quantized to 4-bit, and 4B models using bfloat16 to 8-bit precision have shown strong performance.

Now that we know it's possible to run models on a CPU, is it really viable? Can we achieve performance and maintain model accuracy that meets the requirements of an enterprise scenario? Spoiler alert: the answer is yes, and let’s dive into the proof.

We evaluated AFM-4.5B utilizing llama.cpp on:

On each platform, we conducted 140 evaluations, combining different quantized variants of AFM-4.5B, input and output token lengths, and batch sizes. We recorded the size of the KV cache, prompt processing speed, token generation speed, overall latency, and other metrics. The quantization variants include bf16 (16-bit floating point), Q8_0 (Type 0, 8-bit), Q4_K_M (Medium K-means, 4-bit), Q4_1 (Type 1, 4-bit), and Q4_0 (Type 0, 4-bit), all in the llama.cpp GGUF format.

If you’re familiar with quantization, you may now be thinking, “Quantizing down to 8 and 4 bits must really reduce the accuracy of the model.” For basic quantization techniques, you’d be correct. However, current techniques, such as K-Quants and I-Quants, implement clever algorithms to maintain more relevant information at lower quantizations. For example, when measuring the perplexity of AFM-4.5B, we found that quantizing from bf-16 to 8-bit had no impact. Interestingly, going from 8-bit to 4-bit only increased perplexity by 1% (lower perplexity is better).

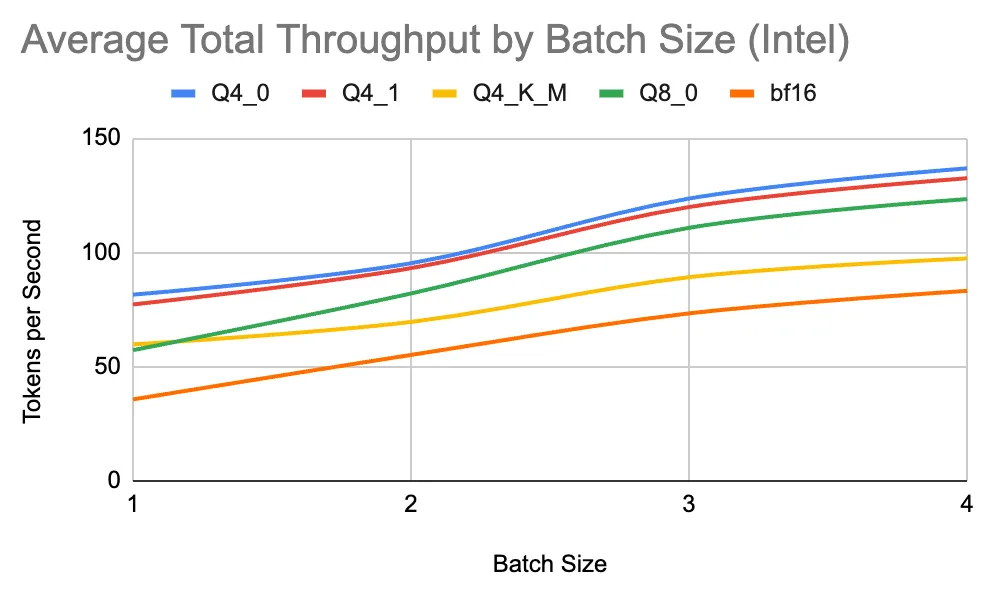

We’ll start by looking at AFM-4.5B on the Intel Xeon Sapphire Rapids Processor. When utilizing all 32 threads, we achieved an average of 136.77 total tokens per second (TPS) for the Q4_0 quantized variant with a batch size of 4. With the same configurations, the bf16 variant achieved an average of 83.13 TPS. These numbers are quite impressive!

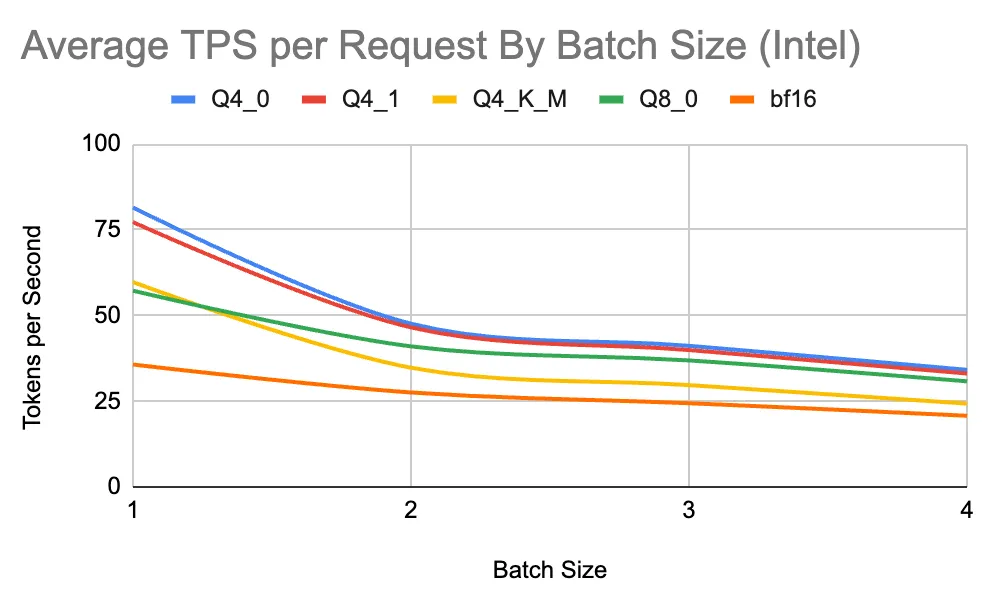

As expected, given the limited parallelism of CPUs, we observe below a drop in TPS per request as the batch size increases; however, with the lowest TPS of 20.79 for AFM-4.5B in bf16, this configuration provides viable speed for the vast majority of production use cases.

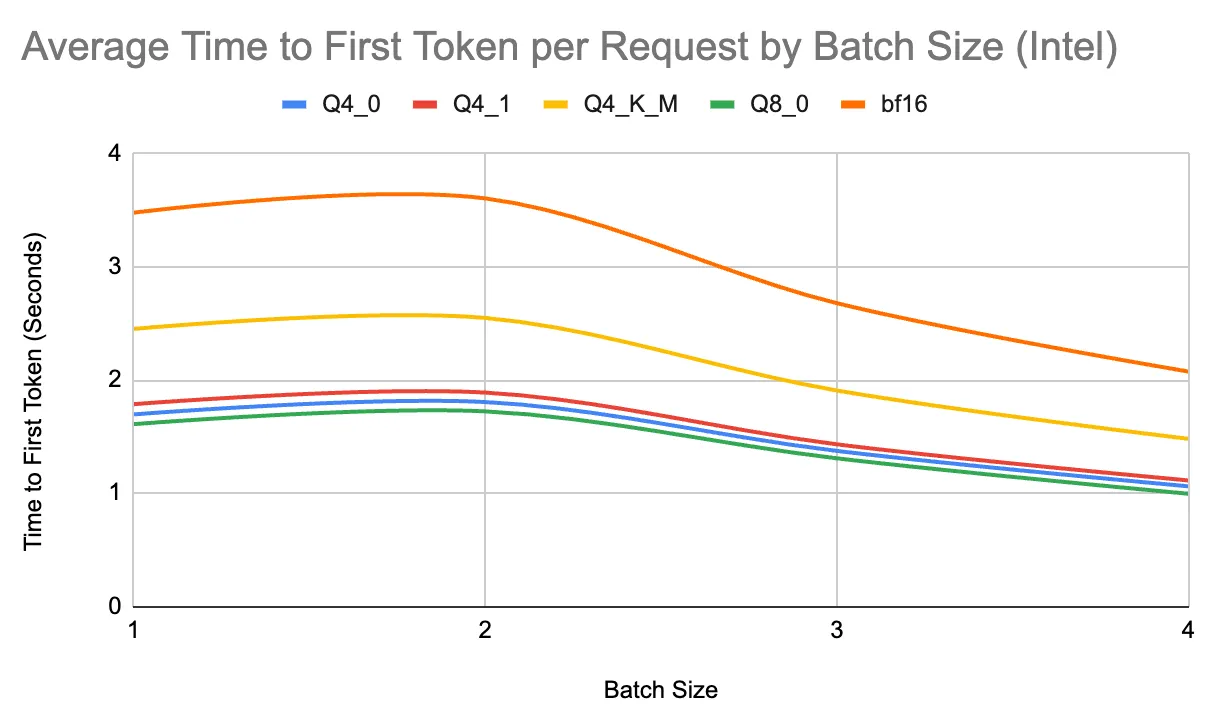

Now, let’s examine the time to first token (TTFT). Besides networking latency and handling request queues, the primary contributor to TTFT is populating the KV cache, also known as pre-fill. This process involves the self-attention mechanism calculating the interactions between each token in the input sequence and all other tokens in the sequence. Given that CPUs don’t have the parallelization capabilities of GPUs, we’d expect this process to take significantly longer than on GPUs.

However, as shown below, the TTFT remains very manageable, with smaller quantizations staying below 2 seconds for all batch sizes and bf16 approaching 2 seconds (specifically, 2.075 seconds) for batch sizes of 4.

Note: We used continuous batching, which helps reduce TTFT as the batch size increases.

AWS Graviton 4

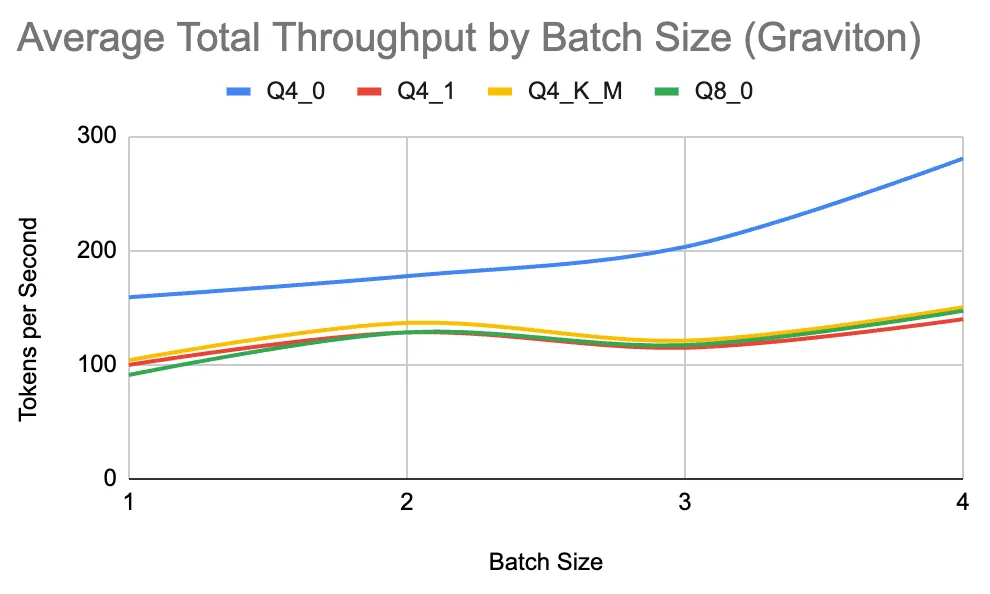

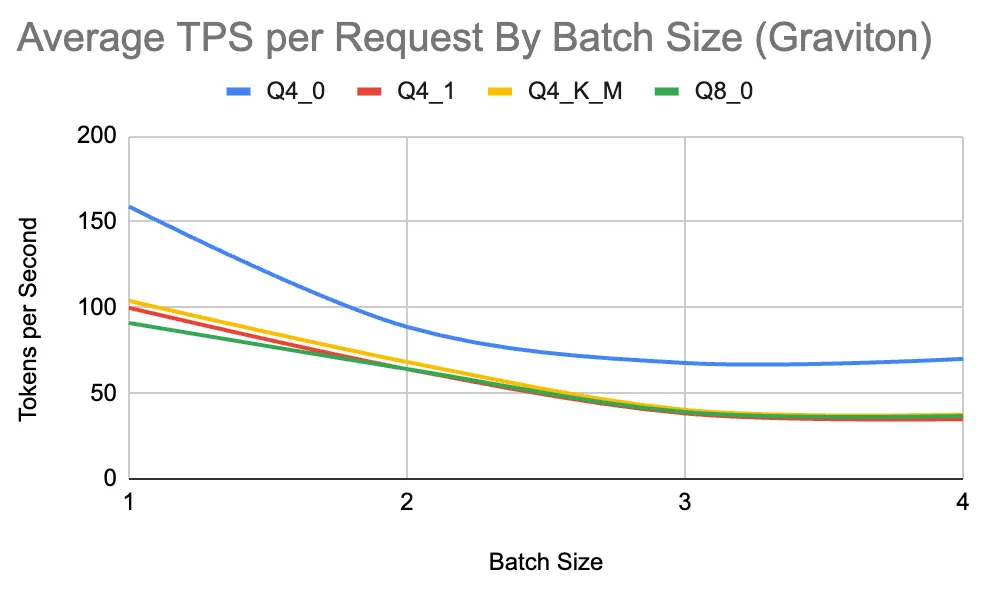

Graviton is an ARM-based processor developed by Amazon Web Services, designed to deliver the best cost-performance ratio for cloud computing workloads. In our tests, Graviton performs exceptionally well for 4- and 8-bit quantized variants of AFM-4.5B, with Q4_0 reaching above 280 TPS at a batch size of 4.

It’s important to note that we did not include BF16 in these graphs because, at the time of publishing this blog, llama.cpp does not leverage ARM bf16 or SVEbf16 instructions to accelerate CPU inference. If you load a BF16 variant of a model, the data is upcast to FP32, resulting in very poor performance (~2 TPS at all batch sizes). We felt that this was not a fair comparison to include.

The per-request TPS remains extremely viable at a batch size of 4, maintaining throughput of around 35-40 TPS.

Time-to-first-token increases slightly compared to the Intel processor.

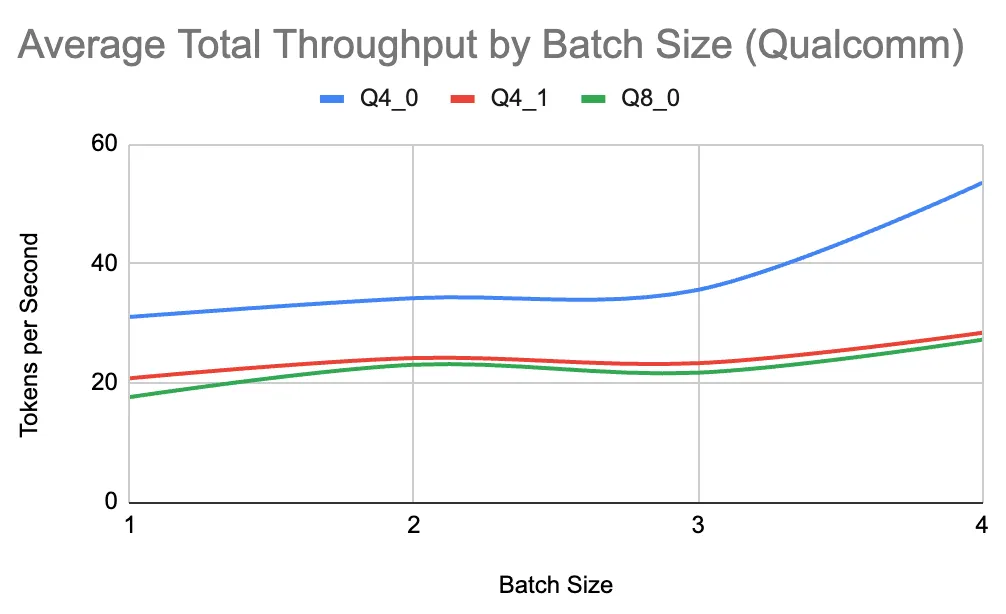

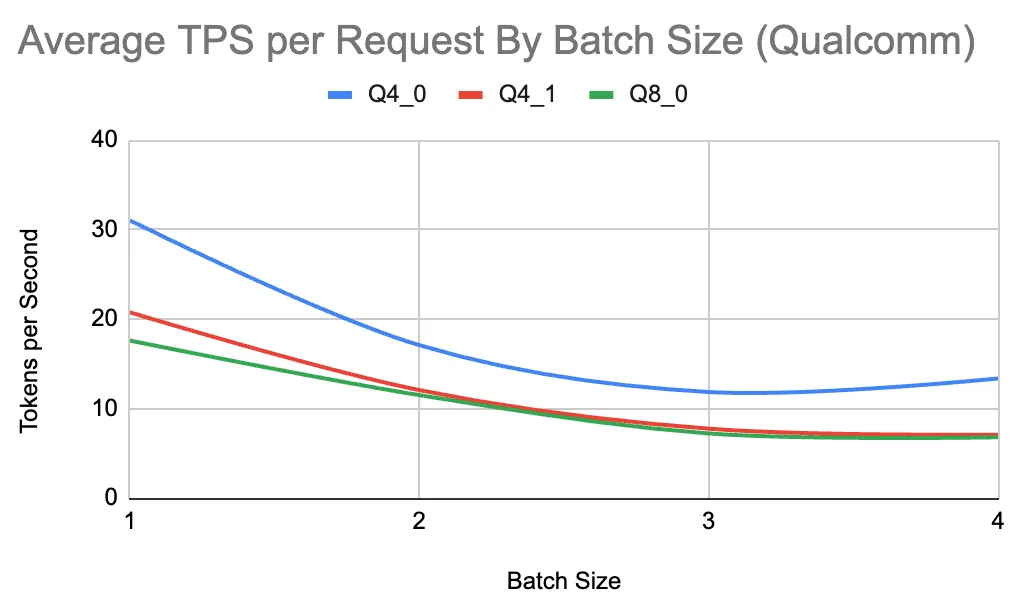

The final hardware we tested was a personal laptop with a Qualcomm X1E-80-100 processor. We conducted this evaluation to see how AFM-4.5B would perform on a smaller CPU. The Qualcomm processor has only 12 logical cores compared to the 32 that the Intel Sapphire Rapids and AWS Graviton processors have. We were pleased to discover that Q8_0, Q4_1, and Q4_0 were all viable options.

Both total throughput and average TPS per request perform as expected on the smaller hardware. An interesting finding during the evaluation was the significant impact repacking had on this processor. Repacking is the process of dynamically reorganizing quantized model weights in memory during loading in order to optimize them for a specific CPU architecture. We found that repacking nearly doubled prompt processing speed and increased text generation speed by ~50%.

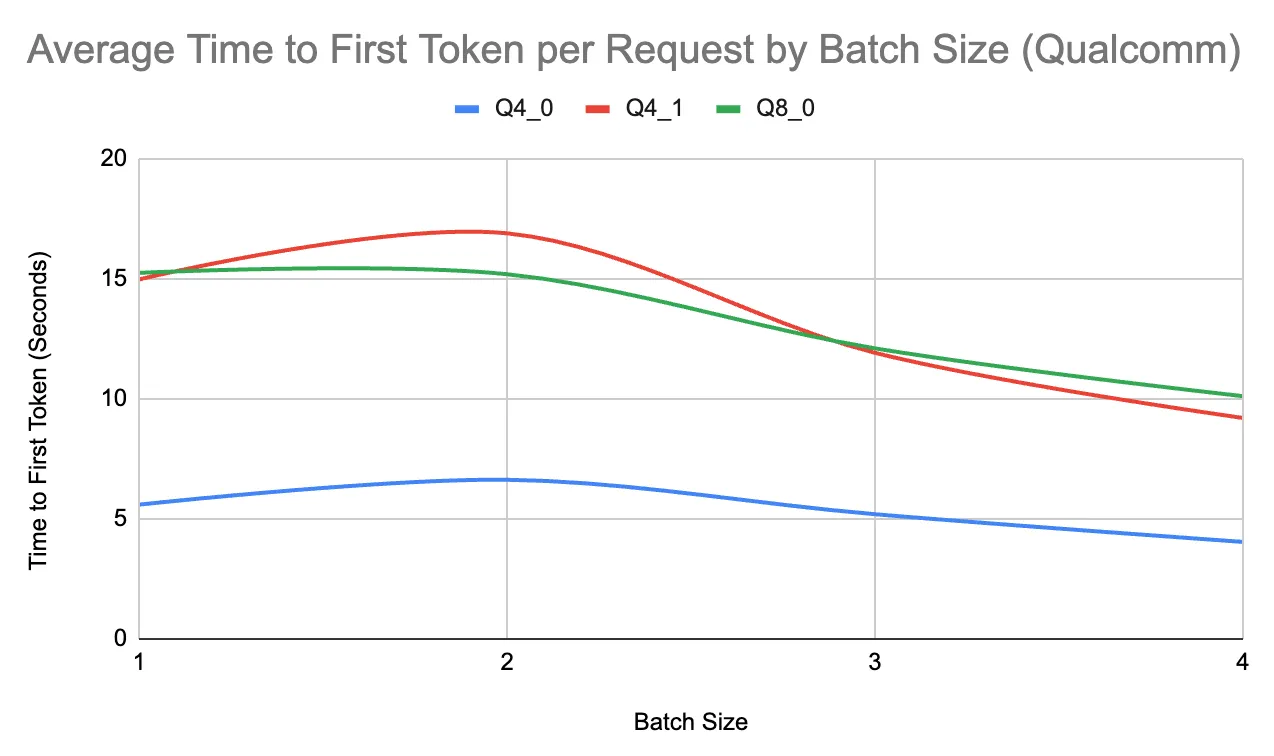

Time-to-first token was drastically higher than the Intel and Graviton tests. A major consideration here is how much the prompt length impacted TTFT. One would expect that longer prompt lengths would increase TTFT; however, we found a larger impact during the Qualcomm test.

For example, when the input prompt was 128 tokens for the Q4_0 variant at a batch size of 1, the average TTFT was 0.94 seconds. This time increased to 10.94 seconds when the input prompt expanded to 1024 tokens. Similarly, the average TTFT for 128 input tokens with the Q8_0 variant at a batch size of 1 was 3.45 seconds, and it increased to 27.83 seconds for 1024 input tokens.

Thus, it would be reasonable to limit inference on the Qualcomm X1E-80-100 processor to short-context questions, excluding applications like Retrieval-Augmented Generation or document analysis.

The results above demonstrate that SLM inference on CPUs can achieve a production-level performance; however, performance is fully dependent on the requirements of your use case. Based on our findings, to determine if you should use CPU inference, you should consider the following:

While GPUs still dominate for high-throughput, low-latency workloads, CPUs—when paired with small, well-quantized models and optimized serving libraries—can absolutely deliver production-level performance. Our benchmarks demonstrate that, with the right hardware, quantization strategy, and batch size tuning, CPUs are not only viable but can also be an excellent choice for cost-sensitive, latency-tolerant, and privacy-conscious deployments.

Ultimately, CPU inference won’t replace GPUs for every use case. Still, it opens up new possibilities—especially for edge, on-device, and secure enterprise applications where GPU access is limited or impractical. As small model quality continues to improve and hardware acceleration becomes more capable, the case for running language models on CPUs will only get stronger.

If you’re curious about whether running an SLM on a CPU would work for your use case, please contact the Arcee AI team here.