Open Models and Libraries

Open-Weight Models

Permissive Apache 2.0 license.

Trinity Nano Preview

Overview

Trinity Nano Preview is a preview of Arcee AI's 6B MoE model with 1B active parameters. It is the small-sized model in our new Trinity family, a series of open-weight models for enterprise and tinkerers alike.

This is a chat-tuned model with a delightful personality and charm that we think users will love. Note that this model pushes the limits of sparsity in small language models, with only 800M non-embedding parameters active per token, and as such may be unstable in certain use cases, especially in this preview.

This is an experimental release. It's fun to talk to but will not be hosted anywhere, so download it and try it out yourself!

Trinity Nano Preview is trained on 10T tokens gathered and curated through a key partnership with Datology, building upon the excellent dataset we used on AFM-4.5B with additional math and code.

Training was performed on a cluster of 512 H200 GPUs powered by Prime Intellect using HSDP parallelism.

More details, including key architecture decisions, can be found on our blog here

Model Details

- Model Architecture: AfmoeForCausalLM

- Parameters: 6B, 1B active

- Experts: 128 total, 8 active, 1 shared

- Context length: 128k

- Training Tokens: 10T

- License: Apache 2.0
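To make the "128 total, 8 active, 1 shared" expert layout concrete, here is a minimal sketch of generic top-k mixture-of-experts routing with an always-on shared expert. It is an illustration only: the scoring, normalization, and expert functions below are assumptions, not the actual AfmoeForCausalLM implementation, and names such as `route_token` and `moe_layer` are hypothetical.

```python
import math

def route_token(router_logits, top_k=8):
    """Pick the top_k experts for one token and return (expert_index, weight) pairs."""
    # Indices of the top_k highest-scoring experts.
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:top_k]
    # Softmax over the selected experts only, so the weights sum to 1.
    m = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

def moe_layer(x, router_logits, experts, shared_expert, top_k=8):
    """Combine the routed experts' outputs with an always-on shared expert."""
    out = shared_expert(x)
    for idx, weight in route_token(router_logits, top_k):
        out += weight * experts[idx](x)
    return out

# Toy setup: 128 scalar "experts", each scaling its input by a different factor.
NUM_EXPERTS = 128
experts = [lambda x, s=i: x * (s / NUM_EXPERTS) for i in range(NUM_EXPERTS)]
shared_expert = lambda x: x
router_logits = [math.sin(i) for i in range(NUM_EXPERTS)]  # hypothetical router scores

selected = route_token(router_logits)
print(len(selected))  # 8 experts run for this token; the other 120 are skipped
print(moe_layer(1.0, router_logits, experts, shared_expert))
```

Only the selected experts are evaluated per token, which is why the active parameter count is much smaller than the total.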

Running our model

Transformers

Use the main transformers branch

git clone https://github.com/huggingface/transformers.git

cd transformers

# pip

pip install '.[torch]'

# uv

uv pip install '.[torch]'

from transformers import AutoTokenizer, AutoModelForCausalLM

import torch

model_id = "arcee-ai/Trinity-Nano-Preview"

tokenizer = AutoTokenizer.from_pretrained(model_id)

model = AutoModelForCausalLM.from_pretrained(

model_id,

torch_dtype=torch.bfloat16,

device_map="auto"

)

messages = [

{"role": "user", "content": "Who are you?"},

]

input_ids = tokenizer.apply_chat_template(

messages,

add_generation_prompt=True,

return_tensors="pt"

).to(model.device)

outputs = model.generate(

input_ids,

max_new_tokens=256,

do_sample=True,

temperature=0.5,

top_k=50,

top_p=0.95

)

response = tokenizer.decode(outputs[0], skip_special_tokens=True)

print(response)

If you are using a released version of transformers instead, pass trust_remote_code=True:

model_id = "arcee-ai/Trinity-Nano-Preview"

tokenizer = AutoTokenizer.from_pretrained(model_id)

model = AutoModelForCausalLM.from_pretrained(

model_id,

torch_dtype=torch.bfloat16,

device_map="auto",

trust_remote_code=True

)

vLLM

Supported as of vLLM release 0.11.1

# pip

pip install "vllm>=0.11.1"

Serving the model with suggested settings:

vllm serve arcee-ai/Trinity-Nano-Preview \

--dtype bfloat16 \

--enable-auto-tool-choice \

--reasoning-parser deepseek_r1 \

--tool-call-parser hermes

llama.cpp

Supported in llama.cpp release b7061

Download the latest llama.cpp release

llama-server -hf arcee-ai/Trinity-Nano-Preview-GGUF:q4_k_m

LM Studio

Supported in latest LM Studio runtime

Update to latest available, then verify your runtime by:

- Click "Power User" at the bottom left

- Click the green "Developer" icon at the top left

- Select "LM Runtimes" at the top

- Refresh the list of runtimes and verify that the latest is installed

Then go to Model Search, search for arcee-ai/Trinity-Nano-Preview-GGUF, download your preferred size, and load it in the chat.

License

Trinity-Nano-Preview is released under the Apache-2.0 license.

Trinity Mini

Overview

Trinity Mini is an Arcee AI 26B MoE model with 3B active parameters. It is the medium-sized model in our new Trinity family, a series of open-weight models for enterprise and tinkerers alike.

This model is tuned for reasoning, but in testing, it uses a similar total token count to competitive instruction-tuned models.

Trinity Mini is trained on 10T tokens gathered and curated through a key partnership with Datology, building upon the excellent dataset we used on AFM-4.5B with additional math and code.

Training was performed on a cluster of 512 H200 GPUs powered by Prime Intellect using HSDP parallelism.

More details, including key architecture decisions, can be found on our blog here

Try it out now at chat.arcee.ai

Model Details

- Model Architecture: AfmoeForCausalLM

- Parameters: 26B, 3B active

- Experts: 128 total, 8 active, 1 shared

- Context length: 128k

- Training Tokens: 10T

- License: Apache 2.0

- Recommended settings:

- temperature: 0.15

- top_k: 50

- top_p: 0.75

- min_p: 0.06
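As a rough illustration of what these sampling settings do, the sketch below applies temperature, top-k, top-p, and min-p to a toy set of logits in plain Python. It is a simplified model under stated assumptions: the function name `filter_probs` is hypothetical, and real runtimes (transformers, vLLM, llama.cpp) may apply the filters in a different order or with different tie-breaking.

```python
import math

def filter_probs(logits, temperature=0.15, top_k=50, top_p=0.75, min_p=0.06):
    """Return the renormalized distribution left after temperature, top-k, top-p and min-p."""
    # Temperature scaling followed by a numerically stable softmax.
    scaled = [l / temperature for l in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    probs = {i: e / total for i, e in enumerate(exps)}

    # top-k: keep the k most likely tokens.
    ranked = sorted(probs, key=probs.get, reverse=True)[:top_k]

    # top-p: keep the smallest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for i in ranked:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break

    # min-p: drop tokens whose probability is below min_p times the top probability.
    threshold = min_p * probs[ranked[0]]
    kept = [i for i in kept if probs[i] >= threshold]

    # Renormalize over the surviving tokens.
    norm = sum(probs[i] for i in kept)
    return {i: probs[i] / norm for i in kept}

logits = [2.0, 1.9, 1.0, 0.0, -1.0]  # hypothetical next-token logits
print(filter_probs(logits))                                # recommended settings
print(filter_probs(logits, temperature=1.0, top_p=0.95))   # looser settings keep more tokens
```

With the low recommended temperature, probability mass concentrates on the top few tokens, so the filters leave a small candidate set.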

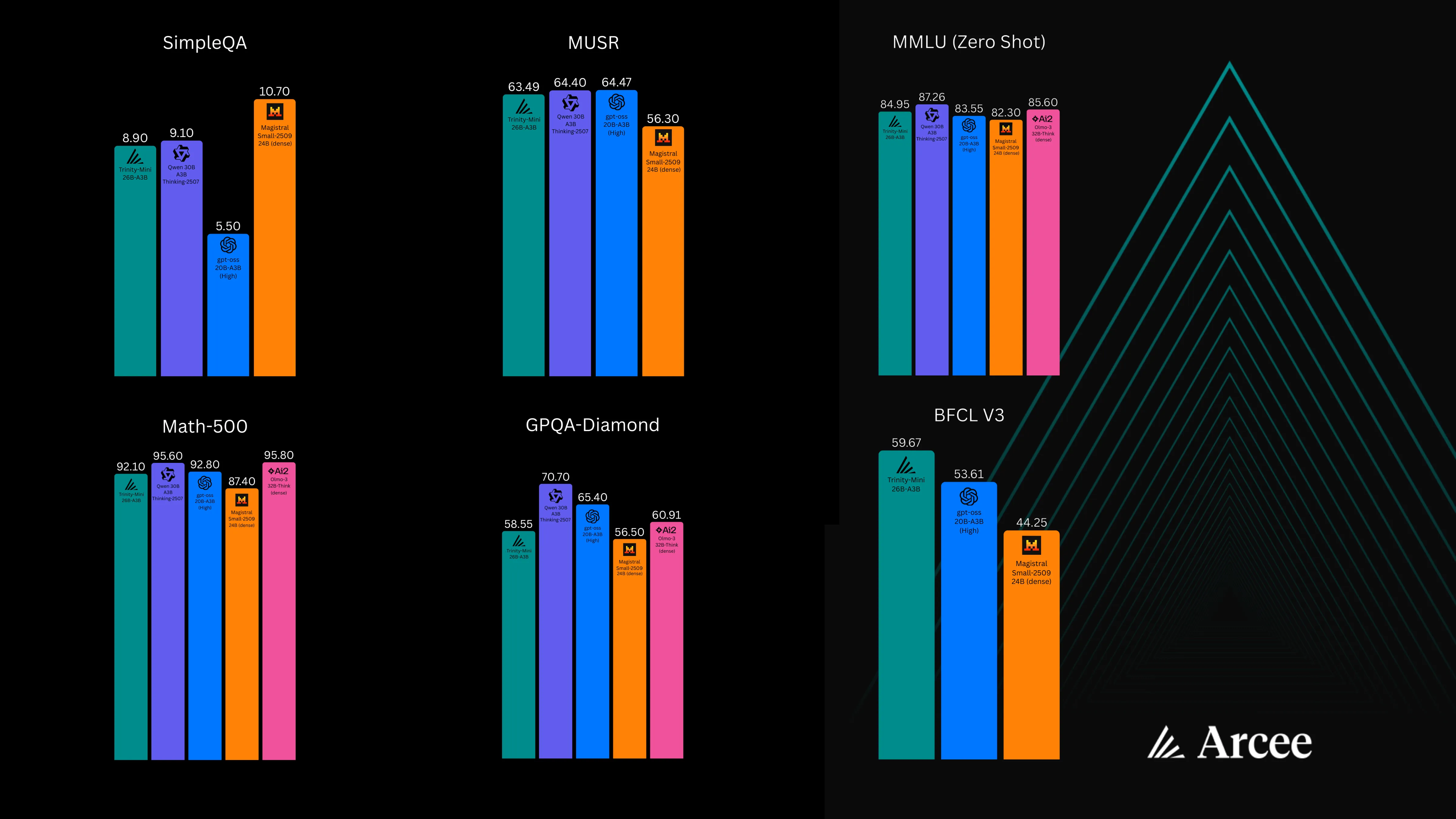

Benchmarks

Running our model

Transformers

Use the main transformers branch

git clone https://github.com/huggingface/transformers.git

cd transformers

# pip

pip install '.[torch]'

# uv

uv pip install '.[torch]'

from transformers import AutoTokenizer, AutoModelForCausalLM

import torch

model_id = "arcee-ai/Trinity-Mini"

tokenizer = AutoTokenizer.from_pretrained(model_id)

model = AutoModelForCausalLM.from_pretrained(

model_id,

torch_dtype=torch.bfloat16,

device_map="auto"

)

messages = [

{"role": "user", "content": "Who are you?"},

]

input_ids = tokenizer.apply_chat_template(

messages,

add_generation_prompt=True,

return_tensors="pt"

).to(model.device)

outputs = model.generate(

input_ids,

max_new_tokens=256,

do_sample=True,

temperature=0.5,

top_k=50,

top_p=0.95

)

response = tokenizer.decode(outputs[0], skip_special_tokens=True)

print(response)

If you are using a released version of transformers instead, pass trust_remote_code=True:

model_id = "arcee-ai/Trinity-Mini"

tokenizer = AutoTokenizer.from_pretrained(model_id)

model = AutoModelForCausalLM.from_pretrained(

model_id,

torch_dtype=torch.bfloat16,

device_map="auto",

trust_remote_code=True

)

vLLM

Supported as of vLLM release 0.11.1

# pip

pip install "vllm>=0.11.1"

Serving the model with suggested settings:

vllm serve arcee-ai/Trinity-Mini \

--dtype bfloat16 \

--enable-auto-tool-choice \

--reasoning-parser deepseek_r1 \

--tool-call-parser hermes

llama.cpp

Supported in llama.cpp release b7061

Download the latest llama.cpp release

llama-server -hf arcee-ai/Trinity-Mini-GGUF:q4_k_m \

--temp 0.15 \

--top-k 50 \

--top-p 0.75 \

--min-p 0.06

LM Studio

Supported in latest LM Studio runtime

Update to latest available, then verify your runtime by:

- Click "Power User" at the bottom left

- Click the green "Developer" icon at the top left

- Select "LM Runtimes" at the top

- Refresh the list of runtimes and verify that the latest is installed

Then go to Model Search, search for arcee-ai/Trinity-Mini-GGUF, download your preferred size, and load it in the chat.

API

Trinity Mini is available today on OpenRouter:

https://openrouter.ai/arcee-ai/trinity-mini

curl -X POST "https://openrouter.ai/api/v1/chat/completions" \

-H "Authorization: Bearer $OPENROUTER_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"model": "arcee-ai/trinity-mini",

"messages": [

{

"role": "user",

"content": "What are some fun things to do in New York?"

}

]

}'

License

Trinity-Mini is released under the Apache-2.0 license.

AFM 4.5B

Overview

AFM-4.5B is a 4.5 billion parameter instruction-tuned model developed by Arcee.ai, designed for enterprise-grade performance across diverse deployment environments from cloud to edge. The base model was trained on a dataset of 8 trillion tokens, comprising 6.5 trillion tokens of general pretraining data followed by 1.5 trillion tokens of midtraining data with enhanced focus on mathematical reasoning and code generation. Following pretraining, the model underwent supervised fine-tuning on high-quality instruction datasets. The instruction-tuned model was further refined through reinforcement learning on verifiable rewards as well as for human preference. We use a modified version of TorchTitan for pretraining, Axolotl for supervised fine-tuning, and a modified version of Verifiers for reinforcement learning.

The development of AFM-4.5B prioritized data quality as a fundamental requirement for achieving robust model performance. We collaborated with DatologyAI, a company specializing in large-scale data curation. DatologyAI's curation pipeline integrates a suite of proprietary algorithms—model-based quality filtering, embedding-based curation, target distribution-matching, source mixing, and synthetic data. Their expertise enabled the creation of a curated dataset tailored to support strong real-world performance.

The model architecture follows a standard transformer decoder-only design based on Vaswani et al., incorporating several key modifications for enhanced performance and efficiency. Notable architectural features include grouped query attention for improved inference efficiency and ReLU^2 activation functions instead of SwiGLU to enable sparsification while maintaining or exceeding performance benchmarks.
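The link between ReLU^2 and sparsification can be seen in a few lines: every non-positive pre-activation maps to exactly zero, so a share of the hidden units contributes nothing for a given token. The sketch below is a scalar illustration with made-up inputs, not code from the AFM-4.5B implementation.

```python
def relu_squared(x):
    """ReLU^2: zero for non-positive inputs, x squared otherwise."""
    return max(x, 0.0) ** 2

def sparsity(values):
    """Fraction of activations that are exactly zero."""
    return sum(v == 0.0 for v in values) / len(values)

pre_activations = [-2.0, -0.5, 0.0, 0.5, 2.0]  # hypothetical values
activations = [relu_squared(x) for x in pre_activations]
print(activations)            # [0.0, 0.0, 0.0, 0.25, 4.0]
print(sparsity(activations))  # 0.6
```

A gated activation such as SwiGLU rarely produces exact zeros, which is the contrast the paragraph above draws.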

The model available in this repo is the instruct model following supervised fine-tuning and reinforcement learning.

View our documentation here for more details: https://docs.arcee.ai/arcee-foundation-models/introduction-to-arcee-foundation-models

Model Details

- Model Architecture: ArceeForCausalLM

- Parameters: 4.5B

- Training Tokens: 8T

- License: Apache 2.0

- Recommended settings:

- temperature: 0.5

- top_k: 50

- top_p: 0.95

- repeat_penalty: 1.1

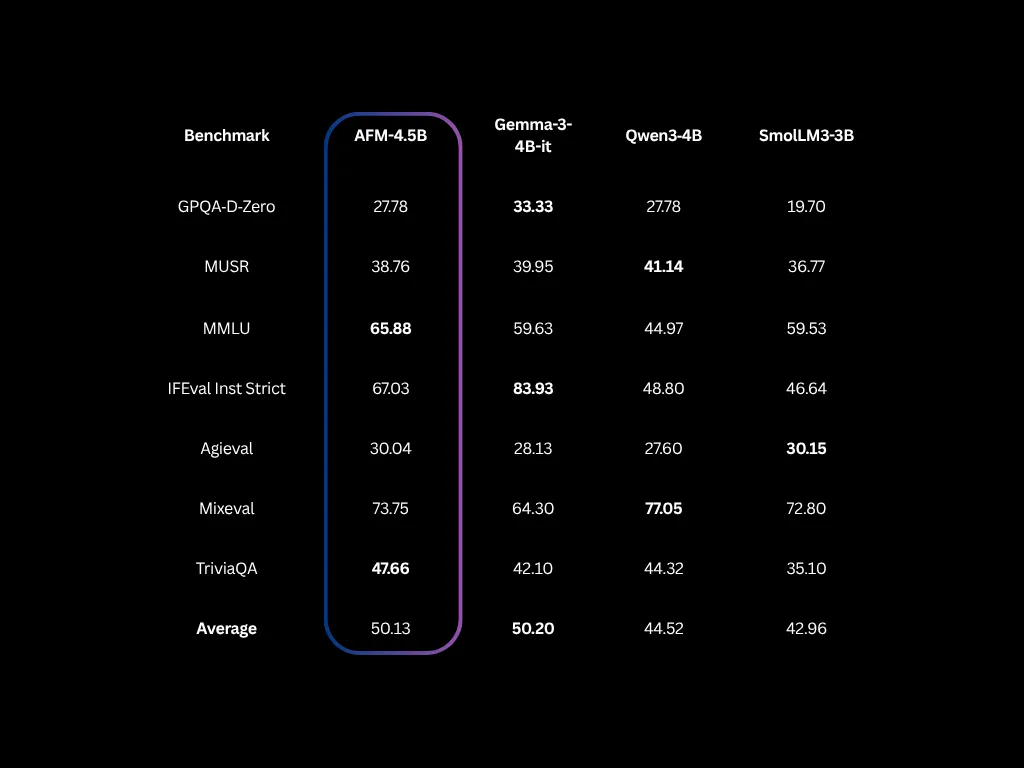

Benchmarks

*Qwen3 and SmolLM's reasoning approach causes their scores to vary wildly from suite to suite - but these are all scores on our internal harness with the same hyperparameters. Be sure to reference their reported scores. SmolLM just released its bench.

How to use with transformers

You can use the model directly with the transformers library.

We recommend a lower temperature, around 0.5, for optimal performance.

from transformers import AutoTokenizer, AutoModelForCausalLM

import torch

model_id = "arcee-ai/AFM-4.5B"

tokenizer = AutoTokenizer.from_pretrained(model_id)

model = AutoModelForCausalLM.from_pretrained(

model_id,

torch_dtype=torch.bfloat16,

device_map="auto"

)

messages = [

{"role": "user", "content": "Who are you?"},

]

input_ids = tokenizer.apply_chat_template(

messages,

add_generation_prompt=True,

return_tensors="pt"

).to(model.device)

outputs = model.generate(

input_ids,

max_new_tokens=256,

do_sample=True,

temperature=0.5,

top_k=50,

top_p=0.95

)

response = tokenizer.decode(outputs[0], skip_special_tokens=True)

print(response)

How to use with vLLM

Ensure you are on version 0.10.1 or newer

pip install "vllm>=0.10.1"

You can then serve the model natively:

vllm serve arcee-ai/AFM-4.5B

How to use with Together API

You can access this model directly via the Together Playground.

Python (Official Together SDK)

from together import Together

client = Together()

response = client.chat.completions.create(

model="arcee-ai/AFM-4.5B",

messages=[

{

"role": "user",

"content": "What are some fun things to do in New York?"

}

]

)

print(response.choices[0].message.content)

cURL

curl -X POST "https://api.together.xyz/v1/chat/completions" \

-H "Authorization: Bearer $TOGETHER_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"model": "arcee-ai/AFM-4.5B",

"messages": [

{

"role": "user",

"content": "What are some fun things to do in New York?"

}

]

}'

Quantization support

Support for llama.cpp and Intel OpenVINO is available:

https://huggingface.co/arcee-ai/AFM-4.5B-GGUF

https://huggingface.co/arcee-ai/AFM-4.5B-ov

License

AFM-4.5B is released under the Apache-2.0 license.

Arcee-SuperNova-Medius

Overview

Arcee-SuperNova-Medius is a 14B parameter language model developed by Arcee.ai, built on the Qwen2.5-14B-Instruct architecture. This unique model is the result of a cross-architecture distillation pipeline, combining knowledge from both the Qwen2.5-72B-Instruct model and the Llama-3.1-405B-Instruct model. By leveraging the strengths of these two distinct architectures, SuperNova-Medius achieves high-quality instruction-following and complex reasoning capabilities in a mid-sized, resource-efficient form.

SuperNova-Medius is designed to excel in a variety of business use cases, including customer support, content creation, and technical assistance, while maintaining compatibility with smaller hardware configurations. It’s an ideal solution for organizations looking for advanced capabilities without the high resource requirements of larger models like our SuperNova-70B.

Distillation Overview

The development of SuperNova-Medius involved a sophisticated multi-teacher, cross-architecture distillation process, with the following key steps:

- Logit Distillation from Llama 3.1 405B:

- We distilled the logits of Llama 3.1 405B using an offline approach.

- The top K logits for each token were stored to capture most of the probability mass while managing storage requirements.

- Cross-Architecture Adaptation:

- Using mergekit-tokensurgeon, we created a version of Qwen2.5-14B that uses the vocabulary of Llama 3.1 405B.

- This allowed for the use of Llama 3.1 405B logits in training the Qwen-based model.

- Distillation to Qwen Architecture:

- The adapted Qwen2.5-14B model was trained using the stored 405B logits as the target.

- Parallel Qwen Distillation:

- In a separate process, Qwen2.5-72B-Instruct was distilled into a 14B model.

- Final Fusion and Fine-Tuning:

- The Llama-distilled Qwen model's vocabulary was reverted to Qwen vocabulary.

- After re-aligning the vocabularies, a final fusion and fine-tuning step was conducted, using a specialized dataset from EvolKit to ensure that SuperNova-Medius maintained coherence, fluency, and context understanding across a broad range of tasks.
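The offline logit distillation step described above can be sketched as follows: store only the top K teacher logits per token, then train the student against the renormalized teacher distribution. This is a minimal pure-Python illustration under stated assumptions. The function names are hypothetical, the loss shown is a plain cross-entropy rather than the exact objective used for SuperNova-Medius, and teacher and student are assumed to already share token ids (which is what the vocabulary adaptation step provides).

```python
import math

def log_softmax(logits):
    """Numerically stable log-softmax over a list of logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(l - m) for l in logits))
    return [l - log_z for l in logits]

def store_top_k(teacher_logits, k):
    """Keep only the k largest teacher logits as (token_id, logit) pairs."""
    top = sorted(range(len(teacher_logits)),
                 key=lambda i: teacher_logits[i], reverse=True)[:k]
    return [(i, teacher_logits[i]) for i in top]

def covered_mass(teacher_logits, k):
    """Probability mass of the full teacher distribution captured by the top k tokens."""
    probs = sorted((math.exp(l) for l in log_softmax(teacher_logits)), reverse=True)
    return sum(probs[:k])

def distill_loss(stored, student_logits):
    """Cross-entropy between the renormalized top-k teacher distribution and the student."""
    ids = [i for i, _ in stored]
    teacher_logp = log_softmax([l for _, l in stored])  # renormalized over stored tokens
    student_logp = log_softmax(student_logits)          # over the full vocabulary
    return -sum(math.exp(t) * student_logp[i] for i, t in zip(ids, teacher_logp))

teacher = [4.0, 3.0, 0.5, -1.0, -2.0, -3.0]  # hypothetical teacher logits for one token
stored = store_top_k(teacher, k=2)
print(stored)                      # [(0, 4.0), (1, 3.0)]
print(covered_mass(teacher, k=2))  # most of the probability mass with 2 of 6 entries
print(distill_loss(stored, teacher))        # student that agrees with the teacher
print(distill_loss(stored, teacher[::-1]))  # student that disagrees: higher loss
```

Storing a handful of logits per token instead of a full vocabulary row is what keeps the offline dataset manageable.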

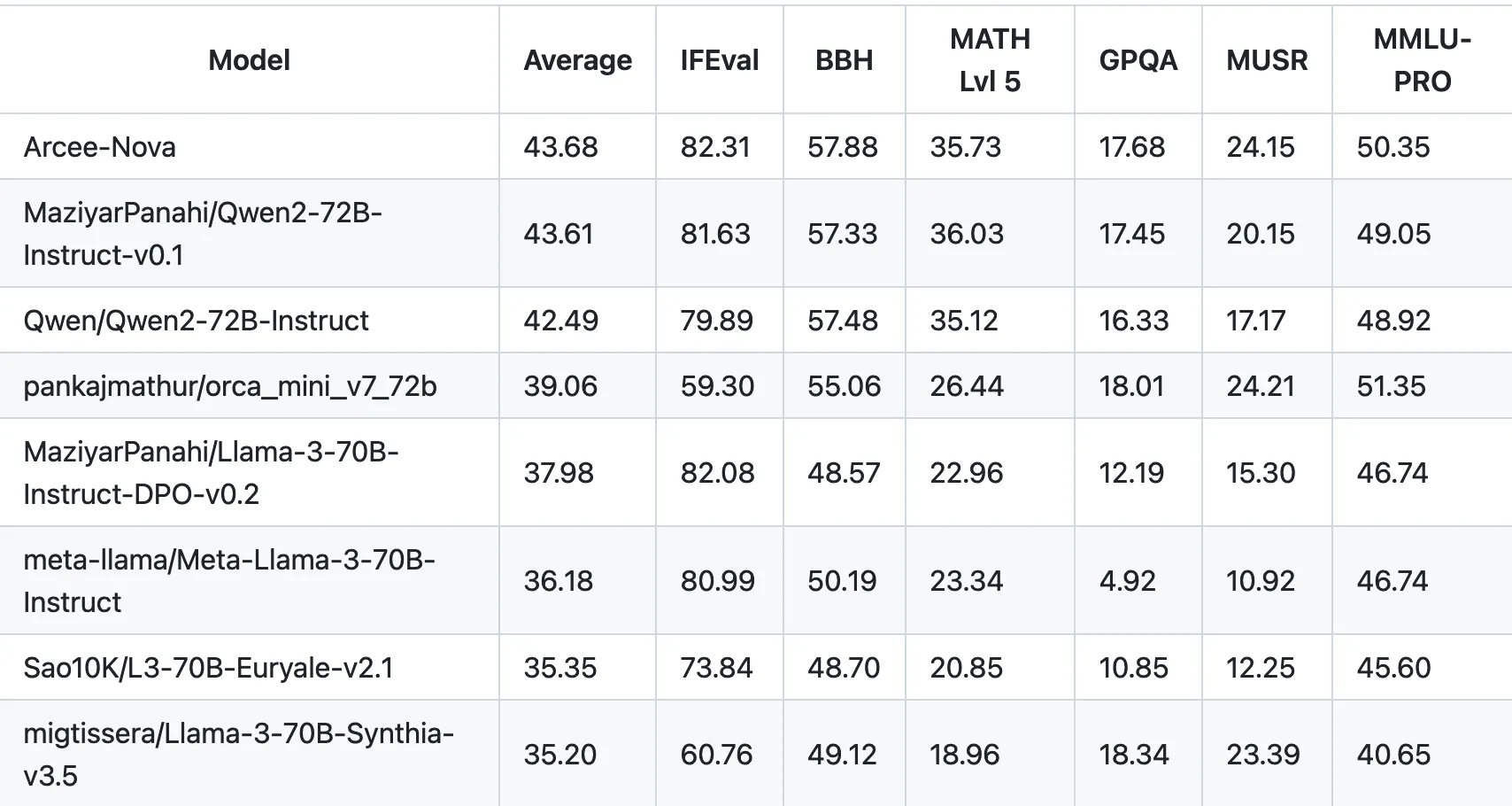

Performance Evaluation

Below are the benchmark results of SuperNova-Medius compared to similar models in its class:

SuperNova-Medius performs exceptionally well in instruction-following (IFEval) and complex reasoning tasks (BBH), demonstrating its capability to handle a variety of real-world scenarios. It outperforms Qwen2.5-14B and SuperNova-Lite in multiple benchmarks, making it a powerful yet efficient choice for high-quality generative AI applications.

Model Use Cases

Arcee-SuperNova-Medius is suitable for a range of applications, including:

- Customer Support: With its robust instruction-following and dialogue management capabilities, SuperNova-Medius can handle complex customer interactions, reducing the need for human intervention.

- Content Creation: The model’s advanced language understanding and generation abilities make it ideal for creating high-quality, coherent content across diverse domains.

- Technical Assistance: SuperNova-Medius has a deep reservoir of technical knowledge, making it an excellent assistant for programming, technical documentation, and other expert-level content creation.

Deployment Options

SuperNova-Medius is available for use under the Apache-2.0 license. For those who need even higher performance, the full-size 70B SuperNova model can be accessed via an Arcee-hosted API or for local deployment. To learn more or explore deployment options, please reach out to sales@arcee.ai.

Technical Specifications

- Model Architecture: Qwen2.5-14B-Instruct

- Distillation Sources: Qwen2.5-72B-Instruct, Llama-3.1-405B-Instruct

- Parameter Count: 14 billion

- Training Dataset: Custom instruction dataset generated with EvolKit

- Distillation Technique: Multi-architecture offline logit distillation with cross-architecture vocabulary alignment.

Summary

Arcee-SuperNova-Medius provides a unique balance of power, efficiency, and versatility. By distilling knowledge from two top-performing teacher models into a single 14B parameter model, SuperNova-Medius achieves results that rival larger models while maintaining a compact size ideal for practical deployment. Whether for customer support, content creation, or technical assistance, SuperNova-Medius is the perfect choice for organizations looking to leverage advanced language model capabilities in a cost-effective and accessible form.

Open LLM Leaderboard Evaluation Results

Detailed results can be found here

Arcee-SuperNova-Lite

Overview

Llama-3.1-SuperNova-Lite is an 8B parameter model developed by Arcee.ai, based on the Llama-3.1-8B-Instruct architecture. It is a distilled version of the larger Llama-3.1-405B-Instruct model, leveraging offline logits extracted from the 405B parameter variant. This 8B variation of Llama-3.1-SuperNova maintains high performance while offering exceptional instruction-following capabilities and domain-specific adaptability.

The model was trained using a state-of-the-art distillation pipeline and an instruction dataset generated with EvolKit, ensuring accuracy and efficiency across a wide range of tasks. For more information on its training, visit blog.arcee.ai.

Llama-3.1-SuperNova-Lite excels in both benchmark performance and real-world applications, providing the power of large-scale models in a more compact, efficient form ideal for organizations seeking high performance with reduced resource requirements.

Open LLM Leaderboard Evaluation Results

Detailed results can be found here

Arcee Spark

Overview

Arcee Spark is a powerful 7B parameter language model that punches well above its weight class. Initialized from Qwen2, this model underwent a sophisticated training process:

- Fine-tuned on 1.8 million samples

- Merged with Qwen2-7B-Instruct using Arcee's mergekit

- Further refined using Direct Preference Optimization (DPO)

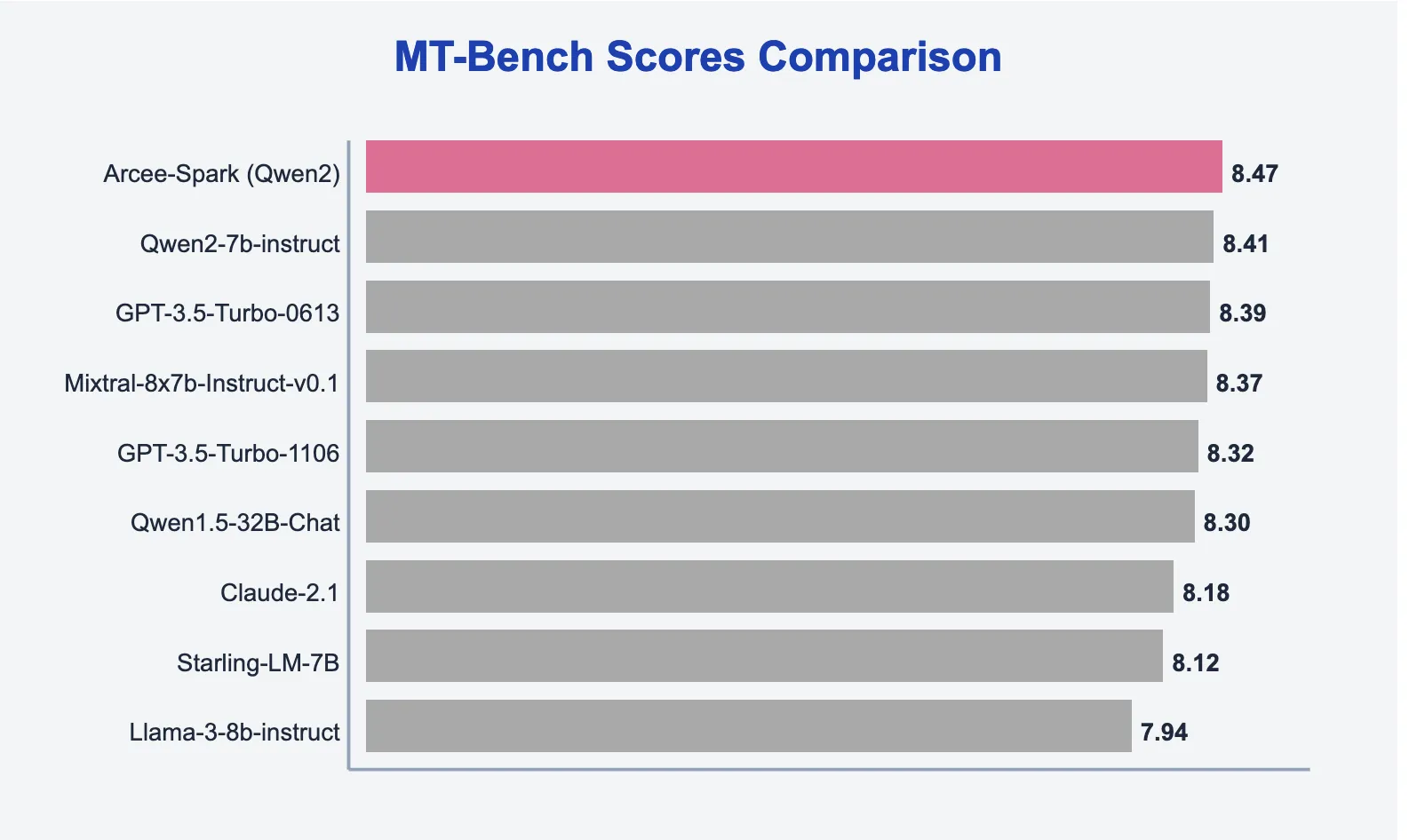

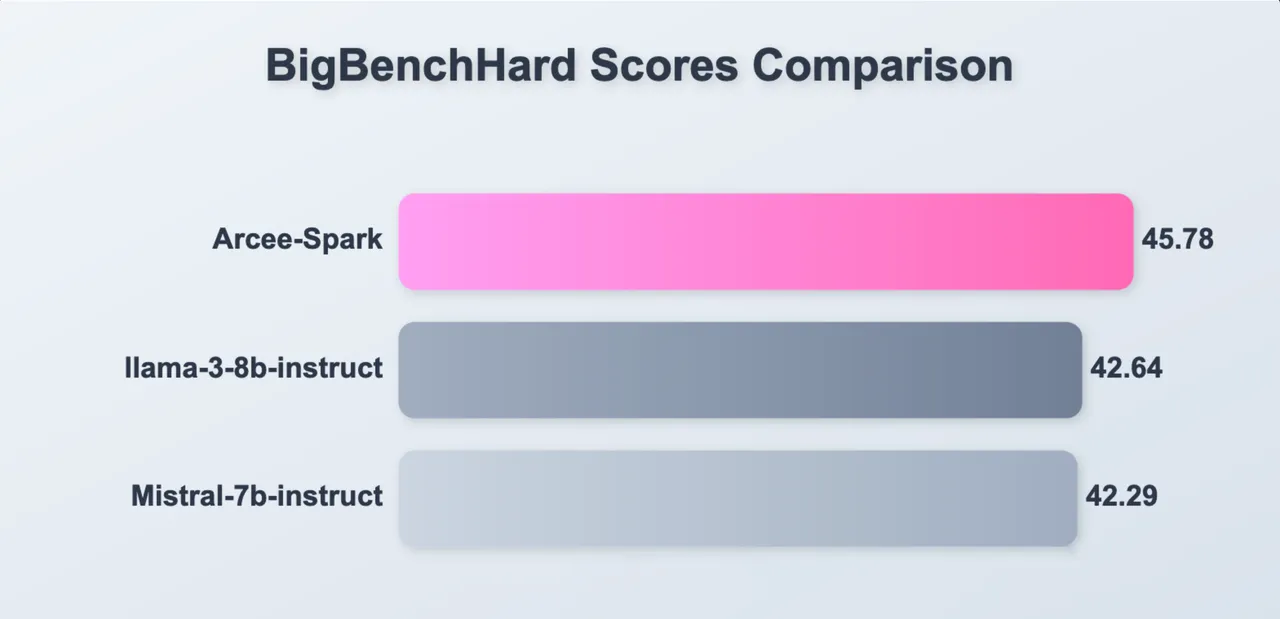

This meticulous process results in exceptional performance, with Arcee Spark achieving the highest score on MT-Bench for models of its size, outperforming even GPT-3.5 on many tasks.

Key Features

- 7B parameters

- State-of-the-art performance for its size

- Initialized from Qwen2

- Advanced training process including fine-tuning, merging, and DPO

- Highest MT-Bench score in the 7B class

- Outperforms GPT-3.5 on many tasks

- Has a context length of 128k tokens, making it ideal for tasks requiring many conversation turns or working with large amounts of text.

Business Use Cases

Arcee Spark offers a compelling solution for businesses looking to leverage advanced AI capabilities without the hefty computational requirements of larger models. Its unique combination of small size and high performance makes it ideal for:

- Real-time applications: Deploy Arcee Spark for chatbots, customer service automation, and interactive systems where low latency is crucial.

- Edge computing: Run sophisticated AI tasks on edge devices or in resource-constrained environments.

- Cost-effective scaling: Implement advanced language AI across your organization without breaking the bank on infrastructure or API costs.

- Rapid prototyping: Quickly develop and iterate on AI-powered features and products.

- On-premise deployment: Easily host Arcee Spark on local infrastructure for enhanced data privacy and security.

Performance and Efficiency

Arcee Spark demonstrates that bigger isn't always better in the world of language models. By leveraging advanced training techniques and architectural optimizations, it delivers:

- Speed: Blazing fast inference times, often 10-100x faster than larger models.

- Efficiency: Significantly lower computational requirements, reducing both costs and environmental impact.

- Flexibility: Easy to fine-tune or adapt for specific domains or tasks.

Despite its compact size, Arcee Spark offers deep reasoning capabilities, making it suitable for a wide range of complex tasks including:

- Advanced text generation

- Detailed question answering

- Nuanced sentiment analysis

- Complex problem-solving

- Code generation and analysis

Model Availability

- Quants: Arcee Spark GGUF

- FP32: For those looking to squeeze every bit of performance out of the model, we offer an FP32 version that scores slightly higher on all benchmarks.

Benchmarks and Evaluations

MT-Bench

########## First turn ##########

score

model turn

arcee-spark 1 8.777778

########## Second turn ##########

score

model turn

arcee-spark 2 8.164634

########## Average ##########

score

model

arcee-spark 8.469325

EQ-Bench

EQ-Bench: 71.4

TruthfulQA

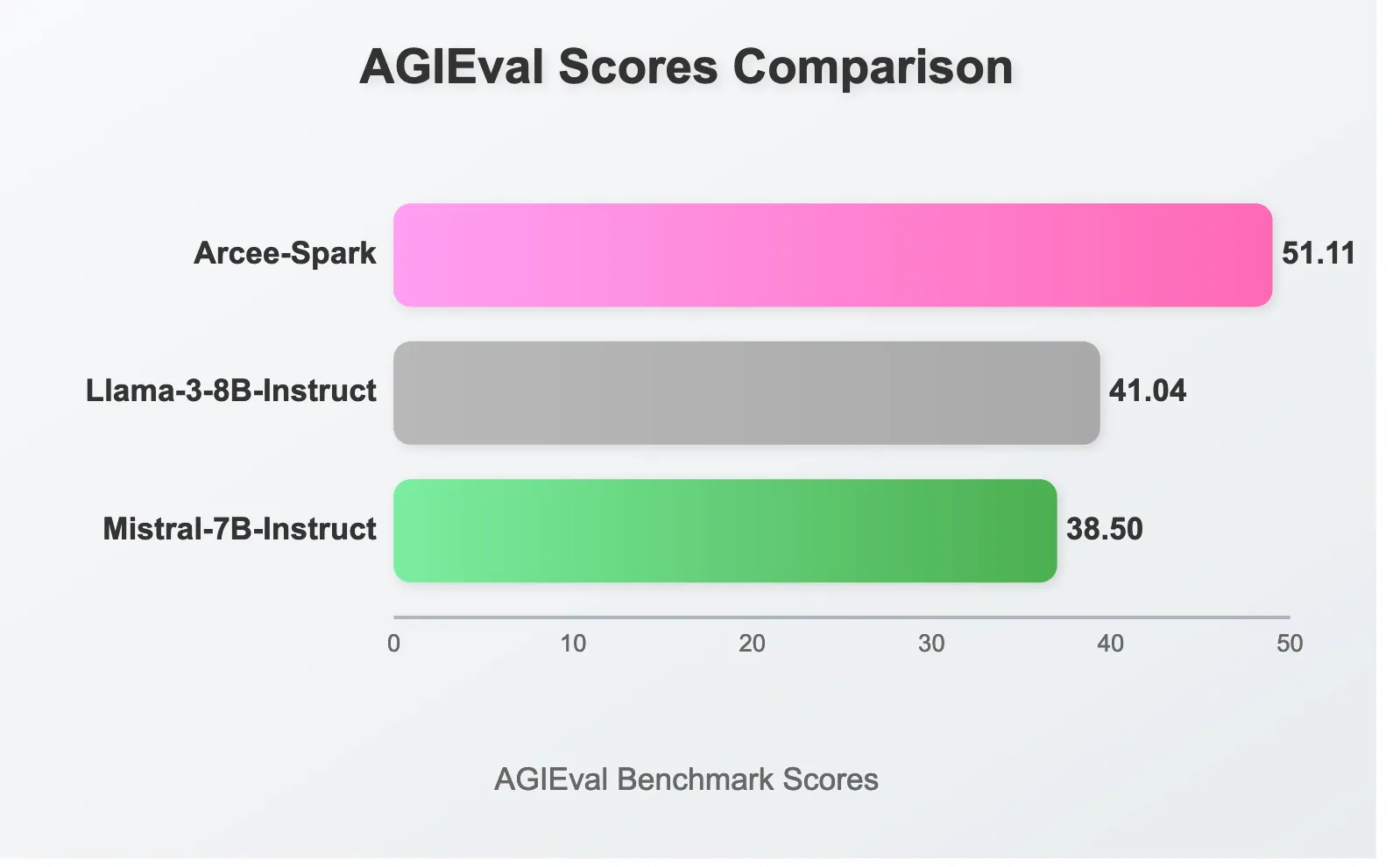

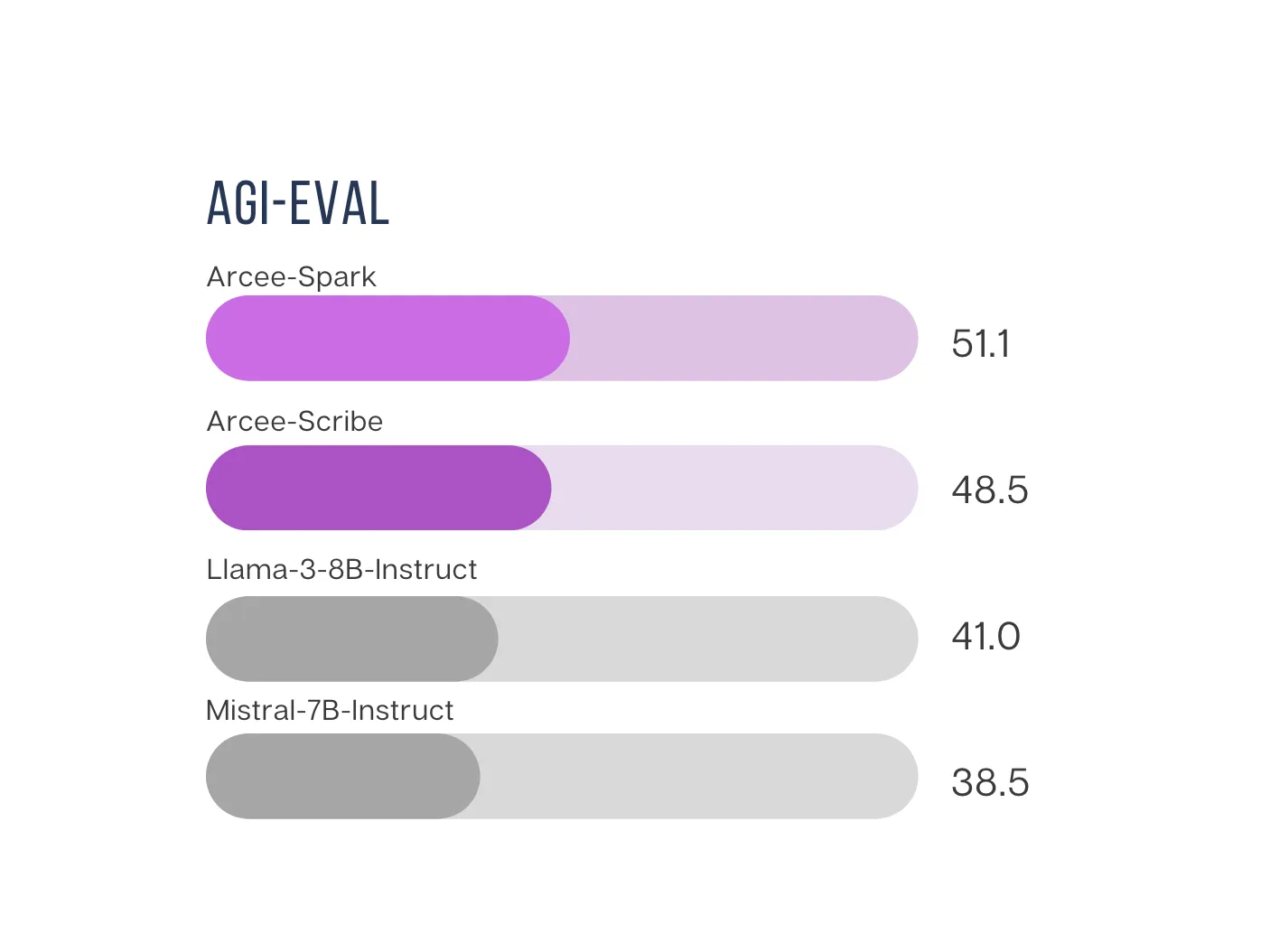

AGI-Eval

AGI-eval average: 51.11

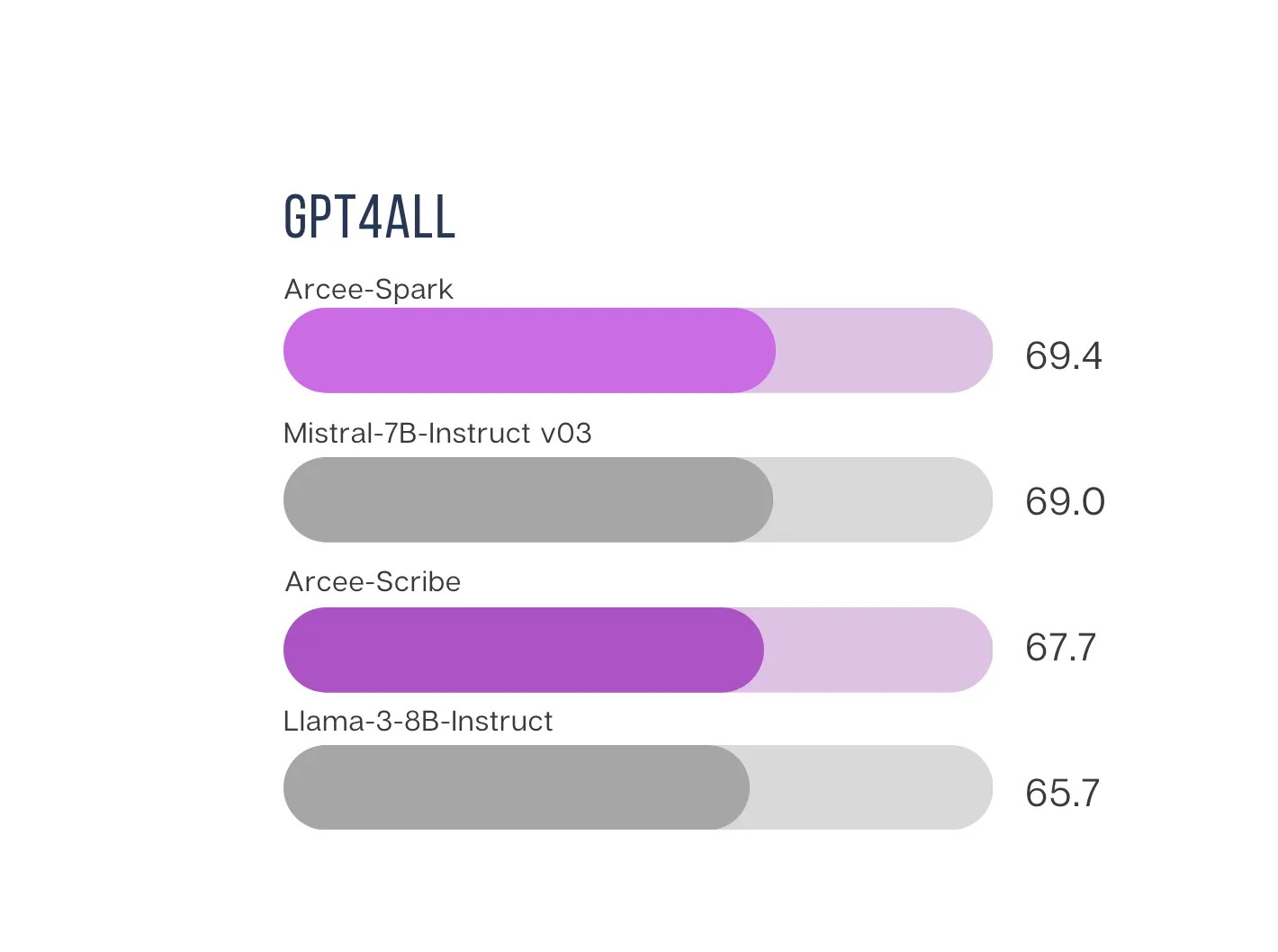

GPT4All Evaluation

GPT4All average: 69.37

Big Bench Hard

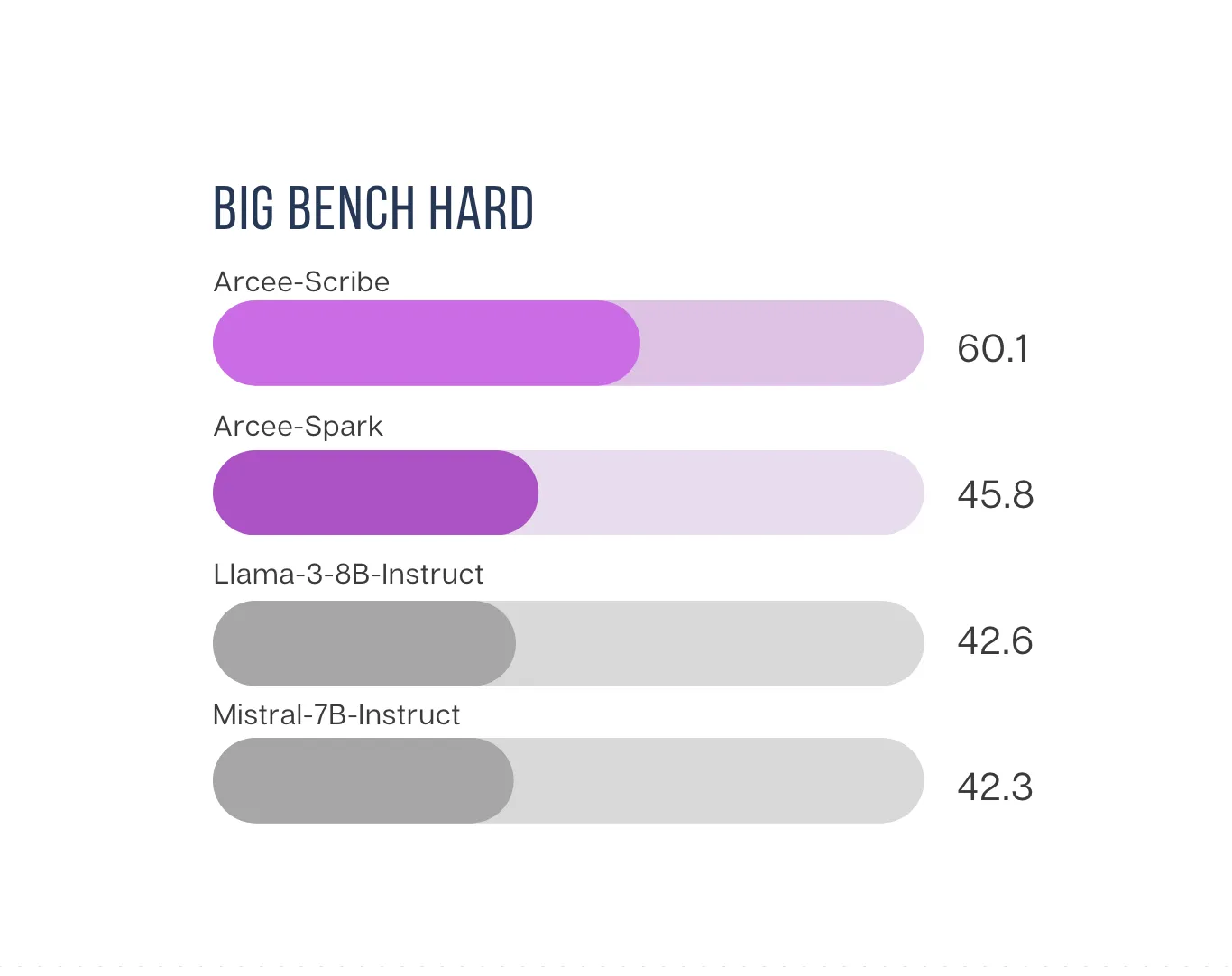

Big Bench average: 45.78

License

Arcee Spark is released under the Apache 2.0 license.

Acknowledgments

- The Qwen2 team for their foundational work

- The open-source AI community for their invaluable tools and datasets

- Our dedicated team of researchers and engineers who push the boundaries of what's possible with compact language models

Open LLM Leaderboard Evaluation Results

Detailed results can be found here

Arcee-Meraj-Mini

Overview

Following the release of Arcee Meraj, our enterprise's globally top-performing Arabic LLM, we are thrilled to unveil Arcee Meraj Mini. This open-source model, meticulously fine-tuned from Qwen2.5-7B-Instruct, is expertly designed for both Arabic and English. This model has undergone rigorous evaluation across multiple benchmarks in both languages, demonstrating top-tier performance in Arabic and competitive results in English. Arcee Meraj Mini’s primary objective is to enhance Arabic capabilities while maintaining robust English language proficiency. Benchmark results confirm that Arcee Meraj Mini excels in Arabic, with English performance comparable to leading models — perfectly aligning with our vision for balanced bilingual strength.

Technical Details

Below is an overview of the key stages in Meraj Mini’s development:

- Data Preparation: We filter candidate samples from diverse English and Arabic sources to ensure high-quality data. Some of the selected English datasets are translated into Arabic to increase the quantity of Arabic samples and improve the model’s quality in bilingual performance. Then, new Direct Preference Optimization (DPO) datasets are continuously prepared, filtered, and translated to maintain a fresh and diverse dataset that supports better generalization across domains.

- Initial Training: We train the Qwen2.5 model with 7 billion parameters using these high-quality datasets in both languages. This allows the model to handle diverse linguistic patterns from over 500 million tokens, ensuring strong performance in Arabic and English tasks.

- Iterative Training and Post-Training: Iterative training and post-training iterations refine the model, enhancing its accuracy and adaptability to ensure it can perform well across varied tasks and language contexts.

- Evaluation: Arcee Meraj Mini is based on training and evaluating 15 different variants to explore optimal configurations, with assessments done on both Arabic and English benchmarks and leaderboards. This step ensures the model is robust in handling both general and domain-specific tasks.

- Final Model Creation: We select the best-performing variant and use the MergeKit library to merge the configurations, resulting in the final Arcee Meraj Mini model. This model is not only optimized for language understanding but also serves as a starting point for domain adaptation in different areas.

With this process, Arcee Meraj Mini is crafted to be more than just a general-purpose language model—it’s an adaptable tool, ready to be fine-tuned for specific industries and applications, empowering users to extend its capabilities for domain-specific tasks.

Capabilities and Use Cases

Arcee Meraj Mini can handle a wide range of language tasks, including:

- Arabic Language Understanding: Arcee Meraj Mini excels in general language comprehension, reading comprehension, and common-sense reasoning, all tailored to the Arabic language, providing strong performance in a variety of linguistic tasks.

- Cultural Adaptation: The model ensures content creation that goes beyond linguistic accuracy, incorporating cultural nuances to align with Arabic norms and values, making it suitable for culturally relevant applications.

- Education: It enables personalized, adaptive learning experiences for Arabic speakers by generating high-quality educational content across diverse subjects, enhancing the overall learning journey.

- Mathematics and Coding: With robust support for mathematical reasoning and problem-solving, as well as code generation in Arabic, Arcee Meraj Mini serves as a valuable tool for developers and professionals in technical fields.

- Customer Service: The model facilitates the development of advanced Arabic-speaking chatbots and virtual assistants, capable of managing customer queries with a high degree of natural language understanding and precision.

- Content Creation: Arcee Meraj Mini generates high-quality Arabic content for various needs, from marketing materials and technical documentation to creative writing, ensuring impactful communication and engagement in the Arabic-speaking world.

Quantized GGUF

Here are GGUF models:

How to

This model uses ChatML prompt template:

<|im_start|>system

{System}

<|im_end|>

<|im_start|>user

{User}

<|im_end|>

<|im_start|>assistant

{Assistant}
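For reference, the template above can be produced by hand with a small helper. This is a sketch only: `to_chatml` is a hypothetical name, and the exact whitespace is defined by the tokenizer's own chat template, so `tokenizer.apply_chat_template` remains the safer route in practice.

```python
def to_chatml(messages, add_generation_prompt=True):
    """Render a list of {"role", "content"} dicts as a ChatML prompt string."""
    parts = [
        f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n"
        for m in messages
    ]
    if add_generation_prompt:
        # Open the assistant turn so the model continues from here.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)

prompt = to_chatml([
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "مرحبا، كيف حالك؟"},
])
print(prompt)
```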

# Use a pipeline as a high-level helper

from transformers import pipeline

messages = [

{"role": "user", "content": "مرحبا، كيف حالك؟"},

]

pipe = pipeline("text-generation", model="arcee-ai/Meraj-Mini")

pipe(messages)

# Load model directly

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("arcee-ai/Meraj-Mini")

model = AutoModelForCausalLM.from_pretrained("arcee-ai/Meraj-Mini")

Evaluations

Open Arabic LLM Leaderboard (OALL) Benchmarks

Arcee Meraj Mini model consistently outperforms state-of-the-art models on most of the Open Arabic LLM Leaderboard (OALL) benchmarks, highlighting its improvements and effectiveness in Arabic language content, and securing the top performing position on average among the other models.

Translated MMLU

We used the multilingual MMLU dataset, as distributed through the LM Evaluation Harness repository, to compare the multilingual strength of different models. Arcee Meraj Mini outperforms the other state-of-the-art models on this benchmark.

English Benchmarks:

Arcee Meraj Mini performs comparably to state-of-the-art models, demonstrating how the model retains its English language knowledge and capabilities while learning Arabic.

Model Usage

For a detailed explanation of the model's capabilities, architecture, and applications, please refer to our blog post: https://blog.arcee.ai/arcee-meraj-mini-2/

To test the model directly, you can try it out using this Google Colab notebook: https://colab.research.google.com/drive/1hXXyNM-X0eKwlZ5OwqhZfO0U8CBq8pFO?usp=sharing

Acknowledgements

We are grateful to the open-source AI community for their continuous contributions and to the Qwen team for their foundational efforts on the Qwen2.5 model series.

Future Directions

As we release the Arcee Meraj Mini to the public, we invite researchers, developers, and businesses to engage with the Arcee Meraj Mini model, particularly in enhancing support for the Arabic language and fostering domain adaptation. We are committed to advancing open-source AI technology and invite the community to explore, contribute, and build upon Arcee Meraj Mini.

Arcee-Agent

Overview

Arcee Agent is a cutting-edge 7B parameter language model from arcee.ai specifically designed for function calling and tool use. Initialized from Qwen2-7B, it rivals the performance of much larger models while maintaining efficiency and speed. This model is particularly suited for developers, researchers, and businesses looking to implement sophisticated AI-driven solutions without the computational overhead of larger language models. Compute for training Arcee-Agent was provided by CrusoeAI. Arcee-Agent was trained using Spectrum.

Full model is available here: Arcee-Agent.

Key Features

- Advanced Function Calling: Arcee Agent excels at interpreting, executing, and chaining function calls. This capability allows it to interact seamlessly with a wide range of external tools, APIs, and services.

- Multiple Format Support: The model is compatible with various tool use formats, including:

- Glaive FC v2

- Salesforce

- Agent-FLAN

Arcee-Agent performs best when using the vLLM OpenAI FC format, but it also excels with prompt-based solutions. Arcee-Agent can accommodate any specific use case or infrastructure needs you may have.

- Dual-Mode Functionality:

- Tool Router: Arcee Agent can serve as intelligent middleware, analyzing requests and efficiently routing them to appropriate tools or larger language models for processing.

- Standalone Chat Agent: Despite its focus on function calling, Arcee Agent is capable of engaging in human-like conversations and completing a wide range of tasks independently.

- Unparalleled Speed and Efficiency: With its 7B parameter architecture, Arcee Agent delivers rapid response times and efficient processing, making it suitable for real-time applications and resource-constrained environments.

- Competitive Performance: In function calling and tool use tasks, Arcee Agent competes with the capabilities of models many times its size, offering a cost-effective solution for businesses and developers.

Detailed Function Calling and Tool Use Capabilities

Arcee Agent's function calling and tool use capabilities open up a world of possibilities for AI-driven applications. Here's a deeper look at what you can achieve:

- API Integration: Seamlessly interact with external APIs, allowing your applications to:

- Fetch real-time data (e.g., stock prices, weather information)

- Post updates to social media platforms

- Send emails or SMS messages

- Interact with IoT devices

- Database Operations: Execute complex database queries and operations through natural language commands, enabling:

- Data retrieval and analysis

- Record updates and insertions

- Schema modifications

- Code Generation and Execution: Generate and run code snippets in various programming languages, facilitating:

- Quick prototyping

- Automated code review

- Dynamic script generation for data processing

- Multi-step Task Execution: Chain multiple functions together to complete complex tasks, such as:

- Booking travel arrangements (flights, hotels, car rentals)

- Generating comprehensive reports from multiple data sources

- Automating multi-stage business processes
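On the application side, function calling usually comes down to parsing the call the model emits and dispatching it to real code. The sketch below shows one way to do that and to chain several calls. Everything in it is an assumption for illustration: the JSON shape (`name`, `arguments`), the tool functions, and the helper names are hypothetical and not Arcee Agent's documented output format.

```python
import json

# Hypothetical tools; a real deployment would call external APIs here.
def get_weather(city):
    return {"city": city, "forecast": "sunny"}

def send_email(to, body):
    return {"sent_to": to, "length": len(body)}

TOOLS = {"get_weather": get_weather, "send_email": send_email}

def dispatch(tool_call_json):
    """Parse one model-emitted tool call and run the matching Python function."""
    call = json.loads(tool_call_json)
    name, args = call["name"], call.get("arguments", {})
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**args)

def run_chain(tool_calls):
    """Execute a sequence of tool calls in order and collect their results."""
    return [dispatch(c) for c in tool_calls]

# Two calls, as a model might emit them for a multi-step task.
calls = [
    '{"name": "get_weather", "arguments": {"city": "New York"}}',
    '{"name": "send_email", "arguments": {"to": "me@example.com", "body": "Sunny today"}}',
]
results = run_chain(calls)
print(results)
```

Rejecting unknown tool names before execution keeps a malformed or hallucinated call from reaching application code.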

Business Use Cases

Arcee Agent's unique capabilities make it an invaluable asset for businesses across various industries. Here are some specific use cases:

- Customer Support Automation:

- Implement AI-driven chatbots that handle complex customer inquiries and support tickets.

- Automate routine support tasks such as password resets, order tracking, and FAQ responses.

- Integrate with CRM systems to provide personalized customer interactions based on user history.

- Sales and Marketing Automation:

- Automate lead qualification and follow-up using personalized outreach based on user behavior.

- Generate dynamic marketing content tailored to specific audiences and platforms.

- Analyze customer feedback from various sources to inform marketing strategies.

- Operational Efficiency:

- Automate administrative tasks such as scheduling, data entry, and report generation.

- Implement intelligent assistants for real-time data retrieval and analysis from internal databases.

- Streamline project management with automated task assignment and progress tracking.

- Financial Services Automation:

- Automate financial reporting and compliance checks.

- Implement AI-driven financial advisors for personalized investment recommendations.

- Integrate with financial APIs to provide real-time market analysis and alerts.

- Healthcare Solutions:

- Automate patient record management and data retrieval for healthcare providers.

- E-commerce Enhancements:

- Create intelligent product recommendation systems based on user preferences and behavior.

- Automate inventory management and supply chain logistics.

- Implement AI-driven pricing strategies and promotional campaigns.

- Human Resources Automation:

- Automate candidate screening and ranking based on resume analysis and job requirements.

- Implement virtual onboarding assistants to guide new employees through the onboarding process.

- Analyze employee feedback and sentiment to inform HR policies and practices.

- Legal Services Automation:

- Automate contract analysis and extraction of key legal terms and conditions.

- Implement AI-driven tools for legal research and case law summarization.

- Develop virtual legal assistants to provide preliminary legal advice and document drafting.

- Educational Tools:

- Create personalized learning plans and content recommendations for students.

- Automate grading and feedback for assignments and assessments.

- Manufacturing and Supply Chain Automation:

- Optimize production schedules and inventory levels using real-time data analysis.

- Implement predictive maintenance for machinery and equipment.

- Automate quality control processes through data-driven insights.

Benchmarking

Intended Uses

Arcee Agent is designed for a wide range of applications where efficient function calling and tool use are crucial. Some potential use cases include:

- Developing sophisticated chatbots and virtual assistants with advanced tool integration

- Creating efficient middleware for routing and preprocessing requests to larger language models

- Implementing AI-driven process automation in resource-constrained environments

- Prototyping and testing complex tool-use scenarios without the need for more computationally expensive models

- Building interactive documentation systems that can execute code examples in real-time

- Developing intelligent agents for IoT device management and home automation

- Creating AI-powered research assistants for various scientific disciplines

Limitations

While Arcee Agent excels in its specialized areas, users should be aware of its limitations:

- The model's general knowledge and capabilities outside of function calling and tool use may be more limited compared to larger, general-purpose language models.

- Performance in tasks unrelated to its core functionalities may not match that of models with more diverse training.

- As with all language models, outputs should be validated and used responsibly, especially in critical applications.

- The model's knowledge cutoff date may limit its awareness of recent events or technological advancements.

Arcee-Lite

Overview

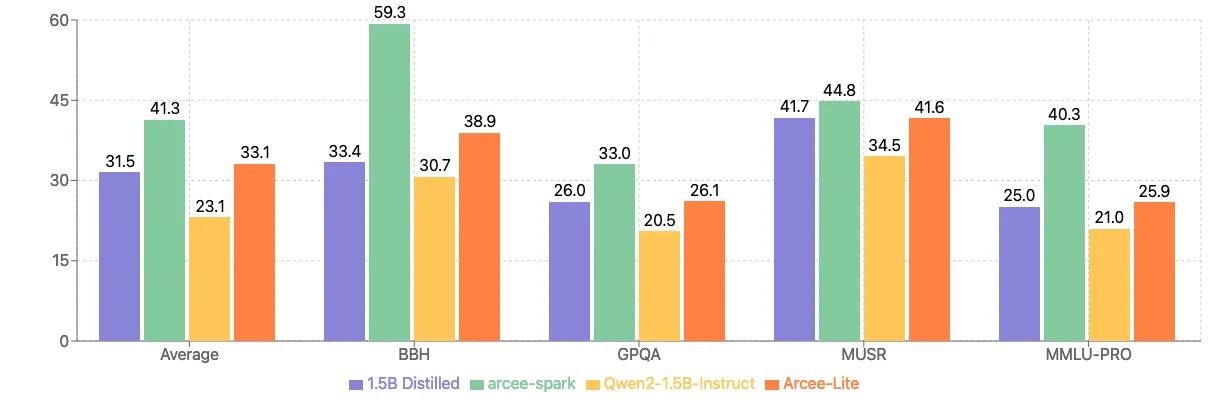

Arcee-Lite is a compact yet powerful 1.5B parameter language model developed as part of the DistillKit open-source project. Despite its small size, Arcee-Lite demonstrates impressive performance, particularly in the MMLU (Massive Multitask Language Understanding) benchmark.

GGUFs available here

Key Features

- Model Size: 1.5 billion parameters

- MMLU Score: 55.93

- Distillation Source: Phi-3-Medium

- Enhanced Performance: Merged with high-performing distillations

About DistillKit

DistillKit is our new open-source project focused on creating efficient, smaller models that maintain high performance. Arcee-Lite is one of the first models to emerge from this initiative.

Performance

Arcee-Lite showcases remarkable capabilities for its size:

- Achieves a 55.93 score on the MMLU benchmark

- Demonstrates exceptional performance across various tasks

Use Cases

Arcee-Lite is suitable for a wide range of applications where a balance between model size and performance is crucial:

- Embedded systems

- Mobile applications

- Edge computing

- Resource-constrained environments

Please note that our internal evaluations were consistently higher than their counterparts on the OpenLLM Leaderboard. They should only be used to compare the relative performance of the models, not weighed against leaderboard scores.

Arcee-Nova

Overview

Arcee-Nova is our highest-performing open source model. Evaluated on the same stack as the OpenLLM Leaderboard 2.0, it is the top-performing open source model tested on that stack. Its performance approaches that of GPT-4 from May 2023, marking a significant milestone.

Nova is a merge of Qwen2-72B-Instruct with a custom model tuned on a generalist dataset mixture.

GGUFs available here

Chat with Arcee-Nova here

Capabilities and Use Cases

Arcee-Nova excels across a wide range of language tasks, demonstrating particular strength in:

- Reasoning: Solving complex problems and drawing logical conclusions.

- Creative Writing: Generating engaging and original content across various genres.

- Coding: Assisting with programming tasks, from code generation to debugging.

- General Language Understanding: Comprehending and generating human-like text in diverse contexts.

Business Applications

Arcee-Nova can be applied to various business tasks:

- Customer Service: Implement sophisticated chatbots and virtual assistants.

- Content Creation: Generate high-quality written content for marketing and documentation.

- Software Development: Accelerate coding processes and improve code quality.

- Data Analysis: Enhance data interpretation and generate insightful reports.

- Research and Development: Assist in literature reviews and hypothesis generation.

- Legal and Compliance: Automate contract analysis and regulatory compliance checks.

- Education and Training: Create adaptive learning systems and intelligent tutoring programs.

Evaluations

Acknowledgments

We extend our gratitude to the open source AI community, whose collective efforts have paved the way for Arcee-Nova. Their commitment to transparency and collaboration continues to drive innovation. We also would like to extend our thanks to the Qwen team - without Qwen2-72B this would not be possible.

Future Directions

As we release Arcee-Nova to the public, we look forward to seeing how researchers, developers, and businesses will leverage its capabilities. We remain committed to advancing open source AI technology and invite the community to explore, contribute, and build upon Arcee-Nova.

Note: This README was written with assistance from Arcee-Nova.

Arcee-Scribe

Overview

Arcee-Scribe is a versatile chat model that excels across a wide range of benchmarks. While it didn't quite meet our exacting standards for Arcee-Spark V2, we found it too delightful to not release. It's our strongest model to date in terms of reasoning on the Big-Bench Hard (BBH) dataset, and we discovered it to be particularly adept at creative writing tasks. The model is a merge of InternLM-2.5-chat with a custom InternLM finetune, incorporating both general and writing-specific data.

Capabilities and Use Cases

Arcee-Scribe functions as a writing assistant and can handle a variety of tasks. Here are some examples of its capabilities:

1. Challenging Dialogue: Crafting nuanced conversations with subtext and complex emotions.

Example:

Character A (an optimist who is usually the first one to bring up solutions, even in difficult situations):

"Maybe it's time we should figure this out, y'know? Like, how we both really feel about what we want from our future. It's been... quite a ride, hasn't it?"

Character B (a reserved person who prefers to let things unfold, but is deeply affected by what's said):

"Yeah, it's... it's been interesting. You've got such a way of making everything sound like it's gonna be all right. But I... I'm not sure it's that simple."

Character A (trying to see the lighter side, attempting to lighten the mood without making things awkward):

"You mean, I'm like a magician's rabbit? Poof, and everything's gonna be just fine? But hey, I like the sound of that. We could use a bit of magic, right?"

Character B (acknowledging the tension, but avoiding the conversation directly):

"I think what you mean is that I'm trying to see the silver lining in every cloud. And you're right, it's... it's a bit like that."

Character A (feeling the need to reiterate, hoping to gently steer the conversation toward a positive direction):

"Well, I mean, we both know it's been challenging. But what I'm trying to say is, maybe we could find a way to make things better. Because, y'know, that's what we've always done. We've always found a way to navigate through the rough waters, haven't we?"

Character B (feeling the weight of their unspoken emotions, hinting at a resignation to their fate):

"That's... that's true. But sometimes, no matter how hard we try, we can't make the wind change. Maybe it's time to look at what's right in front of us, instead of always looking towards what's beyond the horizon."

Character A (realizing the gravity of the unspoken words, attempting to bridge the gap without acknowledging the breakup directly):

"You know, life's been a series of chapters, and I think we've had our fair share of adventures. Maybe it's time for us to start writing a new story—one where we find a way to walk side by side, even if it's on different paths."

Character B (acknowledging the poignant conversation, but still avoiding the explicit mention of a breakup):

"Yeah, chapters and stories. You always have such a way with words, making everything sound so... so beautiful. But sometimes, the most beautiful stories are the ones we write by ourselves."

2. Integrating Technical Concepts: Explaining complex ideas using creative analogies.

Example:

In the quaint heart of a bustling city, there was a bakery renowned not only for its delectable pastries but for its mysterious, enigmatic nature. The bakery, run by the eccentric Mr. Quantum, was a place where the ordinary merged with the extraordinary in a dance of flours and creams.

In this bakery, there were two magical eggs, one white and one blue. These eggs were intertwined in a bond so strong and peculiar that when one was cracked open, the contents inside mirrored the other's contents in an instant. Just as a baker might watch with wonder as dough miraculously doubled in size, the bakers at the Quantum Bakery would stand in awe as the contents of these eggs shared the same fate, no matter the distance separating them.

These eggs represented quantum entanglement, a phenomenon where two or more particles become interconnected in such a way that the state of one instantly influences the state of the other, no matter how far apart they may be. Just as the contents of the magical eggs were indistinguishably alike, the particles in a quantum entanglement remain intertwined, their states dependent on each other's, transcending the limitations of space and time.

The Quantum Bakery was a place where bakers and patrons alike could witness this extraordinary connection firsthand. It was a testament to the boundless possibilities of the quantum world, where the unseen forces at work could create wonders that defied the logic of the everyday.

Through the metaphor of the magical eggs, we see that quantum entanglement is not merely a scientific concept, but a dance of particles that defies the conventions of the physical world, much like the artistry of baking at the Quantum Bakery. It is a reminder that the universe, with its infinite mysteries, continues to astound and inspire, challenging us to look beyond the surface and discover the marvels that lie within.

3. Complex Narrative Structures: Creating stories with non-linear narratives or unique perspectives.

Example:

Arcee-Scribe: "The Unraveling"

The old man closed his eyes, a peaceful smile on his face. The tattered photograph slipped from his fingers.

Moments earlier, he had gazed at the image - a young couple, laughing by a lakeside. His wrinkled hand traced her face.

That morning, he'd found the photo while clearing out his attic, buried in a dusty box he hadn't opened in decades.

Fifty years before, he'd shoved that box into the attic's darkest corner, determined to forget.

Fifty years and one day earlier, he'd received the telegram. "We regret to inform you..."

A week before that, she'd waved goodbye, boarding a train in her crisp nurse's uniform. "I'll be back before you know it," she'd said.

And at the very beginning, on a sunny day by the lake, a young man asked a question. She said yes, and they laughed, unaware of the camera capturing their joy.

Business Applications

Arcee-Scribe can be applied to various business tasks:

- Content Creation: Develop blog posts, articles, and social media content.

- Product Descriptions: Generate clear and engaging product descriptions.

- Customer Communication: Draft responses to customer inquiries or complaints.

- Training Materials: Create scenarios for employee training programs.

- Brainstorming: Generate ideas for new products or marketing campaigns.

Evaluations

Acknowledgments

We owe a great deal of credit to the wonderful pre/post-training work done by the InternLM team. Their incredible work has enabled us to deliver models of this caliber. If you wish to use Arcee-Scribe for commercial purposes, InternLM allows free commercial usage - simply fill out this form: https://wj.qq.com/s2/12727483/5dba/

Future Directions

We look forward to seeing how users will utilize Arcee-Scribe in their creative and professional endeavors. The model aims to assist businesses in their content creation processes and provide a tool for exploring new ideas in writing and communication.

Note: This README was written in large part by Arcee-Scribe.

Arcee-SEC

Overview

Llama-3-SEC-Base: A Domain-Specific Chat Agent for SEC Data Analysis

Llama-3-SEC-Base is a state-of-the-art domain-specific large language model trained on a vast corpus of SEC (Securities and Exchange Commission) data. Built upon the powerful Meta-Llama-3-70B-Instruct model, Llama-3-SEC-Base has been developed to provide unparalleled insights and analysis capabilities for financial professionals, investors, researchers, and anyone working with SEC filings and related financial data. This checkpoint does not include supervised fine-tuning (SFT) and is strictly our CPT model merged with Llama-3-70B-Instruct. For a variant that has been fine-tuned for chat-related purposes, please see Llama-3-SEC-Chat.

Model Details

- Base Model: Meta-Llama-3-70B-Instruct

- Training Data: This is an intermediate checkpoint of our final model, which has seen 20B tokens so far. The full model is still in the process of training. The final model is being trained with 72B tokens of SEC filings data, carefully mixed with 1B tokens of general data from Together AI's RedPajama dataset: RedPajama-Data-1T to maintain a balance between domain-specific knowledge and general language understanding

- Training Method: Continual Pre-Training (CPT) using the Megatron-Core framework, followed by model merging with the base model using the state-of-the-art TIES merging technique in the Arcee Mergekit toolkit

- Training Infrastructure: AWS SageMaker HyperPod cluster with 4 nodes, each equipped with 32 H100 GPUs, ensuring efficient and scalable training of this massive language model
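For readers unfamiliar with TIES, the following is a minimal sketch of the general technique (trim small deltas, elect a sign per parameter, average the agreeing deltas) on flat Python lists. It is not MergeKit's implementation; the function names, the `density` and `weight` parameters, and the toy values are assumptions chosen for illustration.

```python
def trim(task_vector, density):
    """Keep only the largest-magnitude fraction of deltas; zero the rest."""
    k = max(1, int(round(len(task_vector) * density)))
    threshold = sorted((abs(v) for v in task_vector), reverse=True)[k - 1]
    # Ties at the threshold are all kept, so slightly more than k may survive.
    return [v if abs(v) >= threshold else 0.0 for v in task_vector]

def ties_merge(base, finetuned_models, density=0.5, weight=1.0):
    """Merge fine-tuned models into base using trim / elect sign / disjoint mean."""
    task_vectors = [
        trim([f - b for f, b in zip(model, base)], density)
        for model in finetuned_models
    ]
    merged = []
    for i, b in enumerate(base):
        values = [tv[i] for tv in task_vectors]
        # Elect the sign carrying the most total mass for this parameter.
        sign_positive = sum(values) >= 0
        # Average only the non-zero deltas that agree with the elected sign.
        agreeing = [v for v in values if v != 0.0 and (v > 0) == sign_positive]
        delta = sum(agreeing) / len(agreeing) if agreeing else 0.0
        merged.append(b + weight * delta)
    return merged

base = [0.0, 0.0, 0.0, 0.0]
model_a = [1.0, 0.1, -2.0, 0.0]
model_b = [3.0, -0.1, 1.0, 0.0]
print(ties_merge(base, [model_a, model_b]))  # [2.0, 0.0, -2.0, 0.0]
```

In the toy example the small deltas at index 1 are trimmed away, and at index 2 the conflicting positive delta is discarded because the negative sign carries more mass.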

Use Cases

Llama-3-SEC-Base is designed to assist with a wide range of tasks related to SEC data analysis, including but not limited to:

- In-depth investment analysis and decision support

- Comprehensive risk management and assessment

- Ensuring regulatory compliance and identifying potential violations

- Studying corporate governance practices and promoting transparency

- Conducting market research and tracking industry trends

The model's deep understanding of SEC filings and related financial data makes it an invaluable tool for anyone working in the financial sector, providing powerful natural language processing capabilities tailored to the specific needs of this domain.

Evaluation

To ensure the robustness and effectiveness of Llama-3-SEC-Base, the model has undergone rigorous evaluation on both domain-specific and general benchmarks. Key evaluation metrics include:

- Domain-specific perplexity, measuring the model's performance on SEC-related data

- Extractive numerical reasoning tasks, using subsets of TAT-QA and ConvFinQA datasets

- General evaluation metrics, such as BIG-bench, AGIEval, GPT4all, and TruthfulQA, to assess the model's performance on a wide range of tasks

These results demonstrate significant improvements in domain-specific performance while maintaining strong general capabilities, thanks to the use of advanced CPT and model merging techniques.

Training and Inference

Llama-3-SEC-Base uses the llama3 chat template, which allows for efficient and effective fine-tuning of the model on the SEC data. This template ensures that the model maintains its strong conversational abilities while incorporating the domain-specific knowledge acquired during the CPT process.

To run inference with the Llama-3-SEC-Base model using the llama3 chat template, use the following code:

from transformers import AutoModelForCausalLM, AutoTokenizer

device = "cuda"

model_name = "arcee-ai/Llama-3-SEC-Base"

model = AutoModelForCausalLM.from_pretrained(

model_name,

torch_dtype="auto",

device_map="auto"

)

tokenizer = AutoTokenizer.from_pretrained(model_name)

prompt = "What are the key regulatory considerations for a company planning to conduct an initial public offering (IPO) in the United States?"

messages = [

{"role": "system", "content": "You are an expert financial assistant - specializing in governance and regulatory domains."},

{"role": "user", "content": prompt}

]

text = tokenizer.apply_chat_template(

messages,

tokenize=False,

add_generation_prompt=True

)

model_inputs = tokenizer([text], return_tensors="pt").to(device)

generated_ids = model.generate(

model_inputs.input_ids,

max_new_tokens=512

)

generated_ids = [

output_ids[len(input_ids):] for input_ids, output_ids in zip(model_inputs.input_ids, generated_ids)

]

response = tokenizer.batch_decode(generated_ids, skip_special_tokens=True)[0]

Limitations and Future Work

This release represents the initial checkpoint of the Llama-3-SEC-Base model, trained on 20B tokens of SEC data. Additional checkpoints will be released in the future as training on the full 70B token dataset is completed. Future work will focus on further improvements to the CPT data processing layer, exploration of advanced model merging techniques, and alignment of CPT models with SFT, DPO, and other cutting-edge alignment methods to further enhance the model's performance and reliability.

Usage

The model is available for both commercial and non-commercial use under the Llama-3 license. We encourage users to explore the model's capabilities and provide feedback to help us continuously improve its performance and usability. For more information - please see our detailed blog on Llama-3-SEC-Base.

Citation

If you use this model in your research or applications, please cite:

@misc{Introducing_SEC_Data_Chat_Agent,

title={Introducing the Ultimate SEC Data Chat Agent: Revolutionizing Financial Insights},

author={Shamane Siriwardhana and Luke Mayers and Thomas Gauthier and Jacob Solawetz and Tyler Odenthal and Anneketh Vij and Lucas Atkins and Charles Goddard and Mary MacCarthy and Mark McQuade},

year={2024},

note={Available at: \url{firstname@arcee.ai}},

url={URL after published}

}

For further information or inquiries, please contact the authors at their respective email addresses (firstname@arcee.ai). We look forward to seeing the exciting applications and research that will emerge from the use of Llama-3-SEC-Base in the financial domain.

Virtuoso-Lite

Overview

Virtuoso-Lite (10B) is our next-generation, 10-billion-parameter language model based on the Llama-3 architecture. It is distilled from Deepseek-v3 using ~1.1B tokens/logits, allowing it to achieve robust performance at a significantly reduced parameter count compared to larger models. Despite its compact size, Virtuoso-Lite excels in a variety of tasks, demonstrating advanced reasoning, code generation, and mathematical problem-solving capabilities.

GGUF

Quantizations available here

Model Details

- Architecture Base: Falcon-10B (based on Llama-3)

- Parameter Count: 10B

- Tokenizer:

- Initially integrated with Deepseek-v3 tokenizer for logit extraction.

- Final alignment uses the Llama-3 tokenizer, with specialized “tokenizer surgery” for cross-architecture compatibility.

- Distillation Data:

- ~1.1B tokens/logits from Deepseek-v3’s training data.

- Logit-level distillation using a proprietary “fusion merging” approach for maximum fidelity.

- License: falcon-llm-license

Background on Deepseek Distillation

Deepseek-v3 serves as the teacher model, from which we capture logits across billions of tokens. Rather than standard supervised fine-tuning, Virtuoso-Lite applies a full logit-level replication to preserve the most crucial insights from the teacher. This approach enables:

- Strong performance on technical/scientific queries

- Enhanced code generation and debugging

- Improved consistency in math-intensive tasks

Intended Use Cases

- Chatbots & Virtual Assistants

- Lightweight Enterprise Data Analysis

- Research Prototypes & Proofs of Concept

- STEM Educational Tools (where smaller footprint is advantageous)

Evaluations

How to Use

Below is a sample code snippet using transformers:

from transformers import AutoTokenizer, AutoModelForCausalLM

model_name = "arcee-ai/virtuoso-lite"

tokenizer = AutoTokenizer.from_pretrained(model_name)

model = AutoModelForCausalLM.from_pretrained(model_name)

prompt = "Provide a concise summary of quantum entanglement."

inputs = tokenizer(prompt, return_tensors="pt")

outputs = model.generate(**inputs, max_new_tokens=150)

print(tokenizer.decode(outputs[0], skip_special_tokens=True))

Training & Fine-Tuning

- Initial Training: Began with Falcon-10B, optimized for large-scale text ingestion.

- Distillation & Merging:

- Trained on ~1.1B tokens/logits from Deepseek-v3.

- Employed “fusion merging” to capture detailed teacher insights.

- Final step included DPO to enhance alignment and mitigate hallucinations.

- Future Developments: We plan to incorporate additional R1 distillations to further improve specialized performance and reduce model footprint.

Performance

Virtuoso-Lite demonstrates strong results across multiple benchmarks (e.g., BBH, MMLU-PRO, MATH), often standing its ground against models with higher parameter counts. This efficiency is largely credited to logit-level distillation, which compresses the teacher model’s capabilities into a more parameter-friendly package.

Limitations

- Context Length: 32k Tokens (may vary depending on the final tokenizer settings and system resources).

- Knowledge Cut-off: Training data may not reflect the latest events or developments beyond June 2024.

Ethical Considerations

- Content Generation Risks: Like any language model, Virtuoso-Lite can generate potentially harmful or biased content if prompted in certain ways.

License

Virtuoso-Lite (10B) is released under the falcon-llm-license. You are free to use, modify, and distribute this model in both commercial and non-commercial applications, subject to the terms and conditions of the license.

If you have questions or would like to share your experiences using Virtuoso-Lite (10B), please connect with us on social media. We’re excited to see what you build—and how this model helps you innovate!

Virtuoso-Medium-v2

Overview

Virtuoso-Medium-v2 (32B) is our next-generation, 32-billion-parameter language model that builds upon the original Virtuoso-Medium architecture. This version is distilled from Deepseek-v3, leveraging an expanded dataset of 5B+ tokens worth of logits. It achieves higher benchmark scores than our previous release (including surpassing Arcee-Nova 2024 in certain tasks).

GGUF

Quantizations available here

Model Details

- Architecture Base: Qwen-2.5-32B

- Parameter Count: 32B

- Tokenizer:

- Initially integrated with Deepseek-v3 tokenizer for logit extraction.

- Final alignment uses the Qwen tokenizer, with specialized “tokenizer surgery” for cross-architecture compatibility.

- Distillation Data:

- ~1.1B tokens/logits from Deepseek-v3’s training data.

- Logit-level distillation using a proprietary “fusion merging” approach afterwards for maximum fidelity.

- License: Apache-2.0

Background on Deepseek Distillation

Deepseek-v3 serves as the teacher model, from which we capture logits across billions of tokens. Rather than standard supervised fine-tuning, we apply a full logit-level replication. This ensures more precise transference of knowledge, including advanced reasoning in:

- Technical and scientific queries

- Complex code generation

- Mathematical problem-solving

Intended Use Cases

- Advanced Chatbots & Virtual Assistants

- Enterprise Data Analysis & Workflow Automation

- Research Simulations & Natural Language Understanding

- Educational Tools for STEM Fields

Evaluations

How to Use

Below is a sample code snippet using transformers:

from transformers import AutoTokenizer, AutoModelForCausalLM

model_name = "arcee-ai/Virtuoso-Medium-v2"

tokenizer = AutoTokenizer.from_pretrained(model_name)

model = AutoModelForCausalLM.from_pretrained(model_name)

prompt = "Provide a concise summary of quantum entanglement."

inputs = tokenizer(prompt, return_tensors="pt")

outputs = model.generate(**inputs, max_new_tokens=150)

print(tokenizer.decode(outputs[0], skip_special_tokens=True))

Training & Fine-Tuning

- Initial Training: Began with Qwen-2.5-32B, calibrated for large-scale text ingestion.

- Distillation & Merging:

- Trained on ~1.1B tokens worth of Deepseek-v3 logits.

- Employed “fusion merging” to retain as much teacher expertise as possible.

- Final step included DPO to improve alignment and reduce model hallucinations.

- Continuous Development: Additional R1 distillations are in progress to further enhance performance and specialization.

Performance

Thanks to a larger parameter count and a richer training corpus, Virtuoso-Medium-v2 delivers high scores across multiple benchmarks (BBH, MMLU-PRO, MATH, etc.). It frequently surpasses other 30B+ models and even some 70B+ architectures in specific tasks.

Limitations

- Context Length: 128k Tokens

- Knowledge Cut-off: Training data may not reflect the latest events or developments, leading to gaps in current knowledge beyond June 2024.

Ethical Considerations

- Content Generation Risks: Like any language model, Virtuoso-Medium-v2 can potentially generate harmful or biased content if prompted in certain ways.

License

Virtuoso-Medium-v2 (32B) is released under the Apache-2.0 License. You are free to use, modify, and distribute this model in both commercial and non-commercial applications, subject to the terms and conditions of the license.

If you have questions or would like to share your experiences using these models, please connect with us on social media. We’re excited to see what you build—and how these models help you innovate!

Open-Source Libraries

Built by our researchers for you.

MergeKit

Overview

Expanded Model Support: Merge Anything, Merge Faster

Meet MergeKit v0.1, which dramatically expands the range of models you can merge. No longer are you limited to specific architectures explicitly supported by MergeKit. This release introduces two game-changing improvements:

- Arbitrary transformers models: MergeKit now seamlessly handles any model architecture supported by the popular transformers library. This means you can merge cutting-edge models as soon as they're released, including vision-language models like LLaVa or QwenVL, alongside the diverse collection of decoder-only models already supported. No more waiting for MergeKit to "catch up"–you're empowered to merge immediately.

- Raw PyTorch Models: Beyond transformers models, MergeKit now supports merging raw PyTorch models. This opens up a world of possibilities, allowing you to merge models serialized with either torch.save (pickle) or safetensors. The new mergekit-pytorch entrypoint lets you merge diffusion models (like Stable Diffusion or FLUX), audio models (like Whisper), computer vision models, and virtually any other PyTorch model you can imagine. As with transformers models, the models being merged must be of the same architecture and size.

Arcee Fusion: The Art of Selective Merging

This release also introduces the public availability of Arcee Fusion, a sophisticated merging method previously used internally to develop our Supernova, Medius, and Virtuoso series models. Arcee Fusion takes a more intelligent approach to merging, focusing on the importance of differences between models rather than simply merging everything indiscriminately. Arcee Fusion works in three key stages:

- Importance Scoring: Instead of blindly merging all parameters, Arcee Fusion calculates an importance score for each parameter, combining the absolute difference between model parameters with a divergence measure based on softmax distributions and KL divergence. This ensures that only meaningful changes are considered.

- Dynamic Thresholding: The algorithm analyzes the distribution of importance scores, calculating key quantiles (median, Q1, and Q3) and setting a dynamic threshold using median + 1.5 × IQR (a standard technique for outlier detection). This intelligently filters out less significant changes.

- Selective Integration: A fusion mask is created based on the importance scores and the threshold. Only the most significant elements are incorporated into the base model, ensuring that the merge process is adaptive and selective. This preserves the base model's stability while integrating the most valuable updates from the other model.

Arcee Fusion avoids the pitfalls of over-updating that can occur with simple averaging, providing a more refined and controlled merging experience. You can activate this powerful new method by specifying merge_method: arcee_fusion in your merge configuration file.
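The three stages can be sketched on flat lists of parameters as shown below. This is only an illustration under stated assumptions, not MergeKit's actual code: the text does not say how the absolute difference and the KL-based term are combined, so the sketch multiplies them elementwise, and the helper names (`importance_scores`, `dynamic_threshold`, `fuse`) are hypothetical.

```python
import math
import statistics

def softmax(values):
    """Numerically stable softmax over a flat list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def importance_scores(base, other, eps=1e-12):
    """Stage 1: score = |difference| * |elementwise KL term| (assumed combination)."""
    p, q = softmax(base), softmax(other)
    scores = []
    for b, o, pi, qi in zip(base, other, p, q):
        kl_term = abs(pi * math.log((pi + eps) / (qi + eps)))
        scores.append(abs(o - b) * kl_term)
    return scores

def dynamic_threshold(scores):
    """Stage 2: median + 1.5 * IQR over the score distribution."""
    q1, median, q3 = statistics.quantiles(scores, n=4)
    return median + 1.5 * (q3 - q1)

def fuse(base, other):
    """Stage 3: keep base values except where the score exceeds the threshold."""
    scores = importance_scores(base, other)
    threshold = dynamic_threshold(scores)
    mask = [s > threshold for s in scores]
    fused = [o if keep else b for b, o, keep in zip(base, other, mask)]
    return fused, mask

base = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
other = [0.1, 0.2, 0.3, 3.0, 0.5, 0.6, 0.7, 0.8]
fused, mask = fuse(base, other)
print(fused)
print(mask)
```

In this toy case only the fourth parameter differs between the two models, so it is the only one whose score clears the threshold and the only value taken from `other`.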

Multi-GPU Execution

MergeKit v0.1 introduces a new --parallel flag for multi-GPU execution. If you have access to a multi-GPU environment, this flag will unlock a near-linear speedup for your merge operations. The --parallel flag is compatible with all merge methods and model types, significantly reducing merge times and boosting your productivity.

Licensing: Balancing Open Access with Continued Development

Finally, we want to address a change to our licensing model. While we are committed to open access, we also need to ensure the long-term sustainability of MergeKit's development. Therefore, we are transitioning to a Business Source License (BSL).

Why are we doing this?

The techniques and methods we've developed are valuable and unique. We believe in the power of open source and want the community to benefit from our work. However, unrestricted commercial use by large entities could jeopardize our ability to continue developing and improving MergeKit. The BSL is a balanced approach that allows us to share our innovations while protecting our long-term viability.

What does this mean for you?

For the vast majority of users (personal, research, and non-commercial), nothing changes. You retain unrestricted access to MergeKit. Even most commercial users will likely be unaffected. The BSL primarily applies to large corporations and highly successful startups using MergeKit in a production setting. If this applies to you, we'll simply need to discuss a commercial license (which includes direct access to Charles Goddard and the MergeKit development team).

We believe this approach strikes the right balance between fostering open innovation and ensuring the continued growth and development of MergeKit. We want to make it clear: we want you to use MergeKit! This licensing change is about ensuring we can continue to provide you with the best possible tool for model merging.

DistillKit

Overview

DistillKit is an open-source research effort in model distillation by Arcee.AI. Our goal is to provide the community with easy-to-use tools for researching, exploring, and enhancing the adoption of open-source Large Language Model (LLM) distillation methods. This release focuses on practical, effective techniques for improving model performance and efficiency.

Features

- Logit-based Distillation (models must be the same architecture)

- Hidden States-based Distillation (models can be different architectures)

- Support for Supervised Fine-Tuning (SFT). DPO and CPT support will come at a later date.

Installation

Quick Install

For a quick and easy installation, you can use our setup script:

./setup.sh

Manual Installation

If you prefer to install dependencies manually, follow these steps:

1. Install basic requirements:

pip install torch wheel ninja packaging

2. Install Flash Attention:

pip install flash-attn

3. Install DeepSpeed:

pip install deepspeed

4. Install remaining requirements:

pip install -r requirements.txt

Configuration

For simplicity, we've set the config settings directly within the training script. You can customize the configuration as follows:

config = {

"project_name": "distil-logits",

"dataset": {

"name": "mlabonne/FineTome-100k", # Only sharegpt format is currently supported.

"split": "train",

# "num_samples": , # You can pass a number here to limit the number of samples to use.

"seed": 42

},

"models": {

"teacher": "arcee-ai/Arcee-Spark",

"student": "Qwen/Qwen2-1.5B"

},

"tokenizer": {

"max_length": 4096,

"chat_template": "{% for message in messages %}{% if loop.first and messages[0]['role'] != 'system' %}{{ '<|im_start|>system\nYou are a helpful assistant.<|im_end|>\n' }}{% endif %}{{'<|im_start|>' + message['role'] + '\n' + message['content'] + '<|im_end|>' + '\n'}}{% endfor %}{% if add_generation_prompt %}{{ '<|im_start|>assistant\n' }}{% endif %}"

},

"training": {

"output_dir": "./results",

"num_train_epochs": 3,

"per_device_train_batch_size": 1,

"gradient_accumulation_steps": 8,

"save_steps": 1000,

"logging_steps": 1,

"learning_rate": 2e-5,

"weight_decay": 0.05,

"warmup_ratio": 0.1,

"lr_scheduler_type": "cosine",

"resume_from_checkpoint": None, # Set to a path or True to resume from the latest checkpoint

"fp16": False,

"bf16": True

},

"distillation": {

"temperature": 2.0,

"alpha": 0.5

},

"model_config": {

"use_flash_attention": True

},

# "spectrum": {

# "layers_to_unfreeze": "/workspace/spectrum/snr_results_Qwen-Qwen2-1.5B_unfrozenparameters_50percent.yaml" # You can pass a spectrum yaml file here to freeze layers identified by spectrum.

# }

}

Chat Template

If you want to use a chat template other than chatml, copy it from the model's tokenizer_config.json, and replace the current chat_template entry in the configuration.
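The copy step can also be scripted. The following is a minimal sketch, assuming the tokenizer_config.json stores the template as a single string under the chat_template key; the helper names are hypothetical and not part of DistillKit.

```python
import json
from pathlib import Path


def load_chat_template(tokenizer_config_path: str) -> str:
    """Read the chat_template string from a tokenizer_config.json file."""
    data = json.loads(Path(tokenizer_config_path).read_text(encoding="utf-8"))
    template = data.get("chat_template")
    if not isinstance(template, str):
        raise ValueError(f"no string chat_template in {tokenizer_config_path}")
    return template


def with_chat_template(config: dict, tokenizer_config_path: str) -> dict:
    """Return a copy of config whose tokenizer.chat_template is replaced."""
    updated = dict(config)
    updated["tokenizer"] = dict(config["tokenizer"])  # leave the input untouched
    updated["tokenizer"]["chat_template"] = load_chat_template(tokenizer_config_path)
    return updated
```

Calling with_chat_template(config, "path/to/tokenizer_config.json") before training would swap in the model's own template while leaving the rest of the configuration unchanged.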

Spectrum Integration

You can use Spectrum to speed up training, although it does not reduce memory overhead. To enable Spectrum, uncomment the "spectrum" section in the configuration and provide the path to your Spectrum YAML file. Please note that further evaluations with Spectrum are still pending.
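To make the idea of freezing layers concrete, here is a small sketch of pattern-based parameter selection. It assumes the Spectrum YAML file lists regular expressions for the parameters that should stay trainable; the function name, the patterns, and the parameter names are illustrative, and this is not DistillKit's own code.

```python
import re


def select_trainable(param_names, unfrozen_patterns):
    """Map each parameter name to True (trainable) or False (frozen)."""
    compiled = [re.compile(pattern) for pattern in unfrozen_patterns]
    return {
        name: any(rx.search(name) for rx in compiled)
        for name in param_names
    }


# Illustrative parameter names in the style of a decoder-only model.
param_names = [
    "model.embed_tokens.weight",
    "model.layers.0.mlp.down_proj.weight",
    "model.layers.0.self_attn.q_proj.weight",
    "model.layers.1.mlp.down_proj.weight",
]
# Illustrative patterns; in practice these would come from the YAML file.
patterns = [r"^model\.embed_tokens\.weight$", r"model\.layers\.0\.mlp\.down_proj"]

trainable = select_trainable(param_names, patterns)
for name, flag in trainable.items():
    print(f"{'train ' if flag else 'freeze'}  {name}")
```

With a PyTorch model, the same mapping could be applied by iterating over model.named_parameters() and setting requires_grad on each parameter accordingly.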

Usage

To launch DistillKit, use the following command:

accelerate launch distil_logits.py

You can replace distil_logits.py with whichever script you want to use.

Advanced Configurations

If you wish to use DeepSpeed, Fully Sharded Data Parallel (FSDP), or Megatron sharding, you can set up your configuration using:

accelerate config

Follow the prompts to configure your desired setup.

DeepSpeed Configurations

We provide sample DeepSpeed configuration files in the ./deepspeed_configs directory. These configurations are shamelessly stolen from Axolotl (thanks to Wing Lian and the Axolotl team for their excellent work!).

To use a specific DeepSpeed configuration, you can specify it in your accelerate config.

Distillation Methods

DistillKit supports two primary distillation methods:

- Logit-based Distillation: This method transfers knowledge from a larger teacher model to a smaller student model by using both hard targets (actual labels) and soft targets (teacher logits). The soft target loss, computed using Kullback-Leibler (KL) divergence, encourages the student to mimic the teacher's output distribution. This method enhances the student model's generalization and efficiency while maintaining performance closer to the teacher model.

- Hidden States-based Distillation: This method involves transferring knowledge by aligning the intermediate layer representations of the student model with those of the teacher model. This process enhances the student's learning by providing richer, layer-wise guidance, improving its performance and generalization. This method allows for cross-architecture distillation, providing flexibility in model architecture choices.
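The two methods above can be sketched in plain Python for a single token position. This is a common textbook formulation, not necessarily the exact loss DistillKit computes: the temperature-squared scaling, the way alpha blends the two terms, and the use of mean squared error for hidden states are assumptions, and all function names are illustrative. The hidden-state helper also assumes both vectors already have the same size; when they do not, a learned projection is typically needed first.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over a list of logits."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)


def logit_distillation_loss(student_logits, teacher_logits, label,
                            temperature=2.0, alpha=0.5):
    """Blend soft-target KL loss with hard-target cross-entropy."""
    teacher_soft = softmax(teacher_logits, temperature)
    student_soft = softmax(student_logits, temperature)
    # Scaling by T^2 keeps the soft-loss gradients comparable across temperatures.
    soft_loss = kl_divergence(teacher_soft, student_soft) * temperature ** 2
    # Hard-target loss: cross-entropy against the true label at temperature 1.
    hard_loss = -math.log(softmax(student_logits)[label])
    return alpha * soft_loss + (1.0 - alpha) * hard_loss


def hidden_state_loss(student_hidden, teacher_hidden):
    """Mean squared error between two hidden-state vectors of equal size."""
    diffs = [(s - t) ** 2 for s, t in zip(student_hidden, teacher_hidden)]
    return sum(diffs) / len(diffs)


teacher = [4.0, 1.0, 0.5]
student = [2.5, 1.5, 0.2]
loss = logit_distillation_loss(student, teacher, label=0)
print(f"combined logit loss: {loss:.4f}")
```

The default temperature and alpha mirror the "distillation" section of the configuration shown earlier. Raising the temperature flattens both distributions so the student also learns from the teacher's lower-probability tokens.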

Performance and Memory Requirements

While the implementation of DistillKit is relatively straightforward, the memory requirements for distillation are higher than for standard SFT. We are actively working on scaling DistillKit to support models larger than 70B parameters, which will involve advanced techniques and efficiency improvements.
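A back-of-the-envelope calculation helps show where some of the extra memory can go. Logit-based distillation needs full-vocabulary logits from both teacher and student, typically on top of holding both models in memory. The sketch below estimates only the logits tensors; the vocabulary size is an assumed value (read the real one from your model's config), and activations, gradients, and optimizer state are ignored.

```python
def tensor_bytes(num_values: int, bytes_per_value: int) -> int:
    """Raw storage for a dense tensor with the given element count."""
    return num_values * bytes_per_value


def logits_bytes(batch_size: int, seq_len: int, vocab_size: int,
                 bytes_per_value: int = 2) -> int:
    """Storage for one full-vocabulary logits tensor (bf16 by default)."""
    return tensor_bytes(batch_size * seq_len * vocab_size, bytes_per_value)


SEQ_LEN = 4096        # "max_length" from the configuration above
BATCH_SIZE = 1        # "per_device_train_batch_size" from the configuration
VOCAB_SIZE = 151_936  # assumed vocabulary size, for illustration only

one_model = logits_bytes(BATCH_SIZE, SEQ_LEN, VOCAB_SIZE)
both_models = 2 * one_model  # teacher logits plus student logits

print(f"logits per model:  {one_model / 2**30:.2f} GiB")
print(f"teacher + student: {both_models / 2**30:.2f} GiB")
```

Because the estimate scales linearly with sequence length and batch size, reducing max_length is one straightforward lever when memory is tight.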

Experimental Results

Our experiments have shown promising results in both general-purpose and domain-specific tasks. Key findings include:

- Both logit-based and hidden states-based distillation methods show improvements over standard SFT across most benchmarks.

- Significant performance gains were observed when distilling models for domain-specific tasks.

- Using the same training dataset for distillation as was used for the teacher model can lead to higher performance gains.

For detailed results and analysis, please refer to our case studies and experimental results here.

Arcee-Labs

This release marks the debut of Arcee-Labs, a division of Arcee.ai dedicated to accelerating open-source research. Our mission is to rapidly deploy resources, models, and research findings to empower both Arcee and the wider community. In an era of increasingly frequent breakthroughs in LLM research, models, and techniques, we recognize the need for agility and adaptability. Through our efforts, we strive to significantly contribute to the advancement of open-source AI technology and support the community in keeping pace with these rapid developments.

Future Directions

We are excited to see how the community will use and improve DistillKit. Future releases will include Continued Pre-Training (CPT) and Direct Preference Optimization (DPO) distillation methods. We welcome community contributions in the form of new distillation methods, training routine improvements, and memory optimizations.

Contributing

We welcome contributions from the community! If you have ideas for improvements, new features, or bug fixes, please feel free to open an issue or submit a pull request.

Contact

For more information about Arcee.AI and our training platform, visit our website at https://arcee.ai.

For technical questions or support, please open an issue in this repository.

Acknowledgments

While our work is ultimately quite different, this project was inspired by Towards Cross-Tokenizer Distillation: the Universal Logit Distillation Loss for LLMs. We thank the authors for their efforts and contributions. We would like to thank the open-source community and all at arcee.ai who have helped make DistillKit possible. We're just getting started.

EvolKit

Overview

EvolKit is a framework for automatically enhancing the complexity of instructions used in fine-tuning Large Language Models (LLMs). Our project aims to revolutionize the evolution process by leveraging open-source LLMs, moving away from closed-source alternatives.

Key Features

- Automatic instruction complexity enhancement

- Integration with open-source LLMs

- Streamlined fine-tuning process

- Support for various datasets from Hugging Face

- Flexible configuration options for optimization

Installation

To set up EvolKit, follow these steps:

1. Clone the repository:

git clone https://github.com/arcee-ai/EvolKit.git

cd EvolKit

2. Install the required dependencies:

pip install -r requirements.txt

Usage

To run the AutoEvol script, use the following command structure:

python run_evol.py --dataset <dataset_name> [options]

Required Parameters:

- --dataset <dataset_name>: The name of the dataset on Hugging Face to use.

- --model <model_name>: Model to use for evolving instructions.

- --generator <generator_type>: Type of generator to use ('openrouter' or 'vllm').

- --batch_size <int>: Number of instructions to process in each batch.

- --num_methods <int>: Number of evolution methods to use.

- --max_concurrent_batches <int>: Maximum number of batches to process concurrently (in our experiment, a cluster of 8xH100 hosting Qwen2-72B-Instruct-GPTQ-Int8 can handle batch size of 50 concurrently).

- --evolve_epoch <int>: Maximum number of epochs for evolving each instruction.

- --output_file <filename>: Name of the output file to save results.

Optional Parameters:

- --dev_set_size <int>: Number of samples to use in the development set. Use -1 for no devset. Default is -1. (We do not recommend using a dev set since it will take much more time to finish each round)

- --use_reward_model: Flag to use a reward model for evaluation. No value required.
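The documented flags can be summarized as an argparse definition. This is an illustrative mirror of the parameters listed above, useful for seeing their types and defaults at a glance; it is not EvolKit's actual parser, and the sample arguments are made up.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Build a parser that mirrors the documented run_evol.py flags."""
    parser = argparse.ArgumentParser(prog="run_evol.py")
    # Required parameters
    parser.add_argument("--dataset", required=True)
    parser.add_argument("--model", required=True)
    parser.add_argument("--generator", required=True,
                        choices=["openrouter", "vllm"])
    parser.add_argument("--batch_size", type=int, required=True)
    parser.add_argument("--num_methods", type=int, required=True)
    parser.add_argument("--max_concurrent_batches", type=int, required=True)
    parser.add_argument("--evolve_epoch", type=int, required=True)
    parser.add_argument("--output_file", required=True)
    # Optional parameters
    parser.add_argument("--dev_set_size", type=int, default=-1)
    parser.add_argument("--use_reward_model", action="store_true")
    return parser


# Hypothetical invocation, parsed from a list instead of sys.argv.
args = build_parser().parse_args([
    "--dataset", "your-org/your-dataset",
    "--model", "your-org/your-model",
    "--generator", "vllm",
    "--batch_size", "100",
    "--num_methods", "3",
    "--max_concurrent_batches", "10",
    "--evolve_epoch", "3",
    "--output_file", "evolved.json",
])
print(args)
```

Leaving out --dev_set_size keeps the documented default of -1 (no dev set), and leaving out --use_reward_model leaves the flag off.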

Models

We found two models that work very well with this pipeline: Qwen2-72B-Instruct and DeepSeek-V2.5 (GPTQ and AWQ versions are fine too).

Other models might work, but they must be very good at generating structured content, because the pipeline parses their output.

VLLM Support

To use VLLM as the backend, set the VLLM_BACKEND environment variable:

export VLLM_BACKEND=http://your-vllm-backend-url:port/v1

If not set, it will default to 'http://localhost:8000/v1'.
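The fallback behavior can be pictured with a few lines of Python. This is a sketch of the described default, not EvolKit's own code; the helper name is hypothetical, and treating an empty value as unset is an assumption.

```python
import os

DEFAULT_VLLM_BACKEND = "http://localhost:8000/v1"


def resolve_vllm_backend(environ=None) -> str:
    """Return the backend URL from VLLM_BACKEND, or the documented default."""
    env = os.environ if environ is None else environ
    # Assumption: an empty string is treated the same as an unset variable.
    return env.get("VLLM_BACKEND") or DEFAULT_VLLM_BACKEND


print(resolve_vllm_backend({}))
print(resolve_vllm_backend({"VLLM_BACKEND": "http://gpu-node:9000/v1"}))
```

Passing a dictionary instead of relying on os.environ makes the lookup easy to test without changing the real environment.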

Example Usage:

To run AutoEvol on the 'small_tomb' dataset with custom parameters:

python run_evol.py --dataset qnguyen3/small_tomb --model Qwen/Qwen2-72B-Instruct-GPTQ-Int8 --generator vllm --batch_size 100 --num_methods 3 --max_concurrent_batches 10 --evolve_epoch 3 --output_file the_tomb_evolved-3e-batch100.json --dev_set_size 5 --use_reward_model

This command will:

- Load the 'qnguyen3/small_tomb' dataset from Hugging Face.

- Use the Qwen2-72B-Instruct-GPTQ-Int8 model with VLLM as the generator.

- Process samples in batches of 100.

- Apply 3 evolution methods for each instruction.

- Process 10 batches concurrently.

- Evolve each instruction for up to 3 epochs.

- Use 5 samples for the development set.

- Use the reward model for evaluation.

- Output the final evolved instructions to the_tomb_evolved-3e-batch100.json.

After evolving the instructions, you can generate answers using:

python gen_answers.py --model Qwen/Qwen2-72B-Instruct-GPTQ-Int8 --generator vllm --data_path the_tomb_evolved-3e-batch100.json --batch_size 50 --output completed_evol_data.json

The final dataset will be saved to completed_evol_data.json in ShareGPT format.
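For readers unfamiliar with ShareGPT format, the sketch below builds and checks one record using the widely used layout: a conversations list whose turns carry from and value fields. The exact fields EvolKit writes are not confirmed by the text above, so treat the structure and the helper names as assumptions.

```python
import json


def to_sharegpt(instruction: str, answer: str) -> dict:
    """Wrap one instruction/answer pair as a ShareGPT-style record."""
    return {
        "conversations": [
            {"from": "human", "value": instruction},
            {"from": "gpt", "value": answer},
        ]
    }


def is_sharegpt_record(record) -> bool:
    """Check that a record has the conversations/from/value structure."""
    if not isinstance(record, dict):
        return False
    turns = record.get("conversations")
    if not isinstance(turns, list) or not turns:
        return False
    return all(
        isinstance(turn, dict)
        and isinstance(turn.get("from"), str)
        and isinstance(turn.get("value"), str)
        for turn in turns
    )


record = to_sharegpt("Explain model distillation in one sentence.",
                     "A smaller student model learns to imitate a larger teacher.")
print(json.dumps(record, indent=2))
```

A check like is_sharegpt_record can be run over a loaded JSON file to catch malformed entries before they reach a training script.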

Components

EvolKit consists of several key components:

- Generator: Uses an LLM for generating initial instructions (OpenRouter or VLLM).

- Evolver: Employs a recurrent evolution strategy.

- Analyzer: Utilizes trajectory analysis.

- Evaluator: Offers two options:

  - Reward Model Evaluator

  - Failure Detector Evaluator (originally from WizardLM's paper)

- Optimizer: Optimizes the evolution method for the next round.

Output