
Merging for pre-training, data privacy in healthcare, and language support

MergeKit is the industry-leading tool for model merging, a technique that combines several pre-trained models into a single model without additional training (and without the need for a GPU). Merging preserves the capabilities of the original models while enhancing AI performance and versatility. Arcee designed MergeKit to be both powerful and accessible, putting the power of model merging into the hands of builders of all levels.

In this article, we’ll distill the lessons learned from three research papers that apply model merging to different problems. Whether you’re interested in improving accuracy, creating domain-specific models, or adding advanced reasoning capabilities to language-specific models, these papers show the important role model merging can play.

While model merging is often discussed in the context of post-training, the authors of the first paper investigate model merging during pre-training, a largely unexplored area of research. Pre-training merging typically involves merging checkpoints from a single training run. However, researchers rarely have access to these intermediate checkpoints, even for open models, which limits their understanding of how useful merging is during pre-training. The builders of DeepSeek and Llama 3 used model merging in developing their models, but they didn’t disclose their exact techniques.

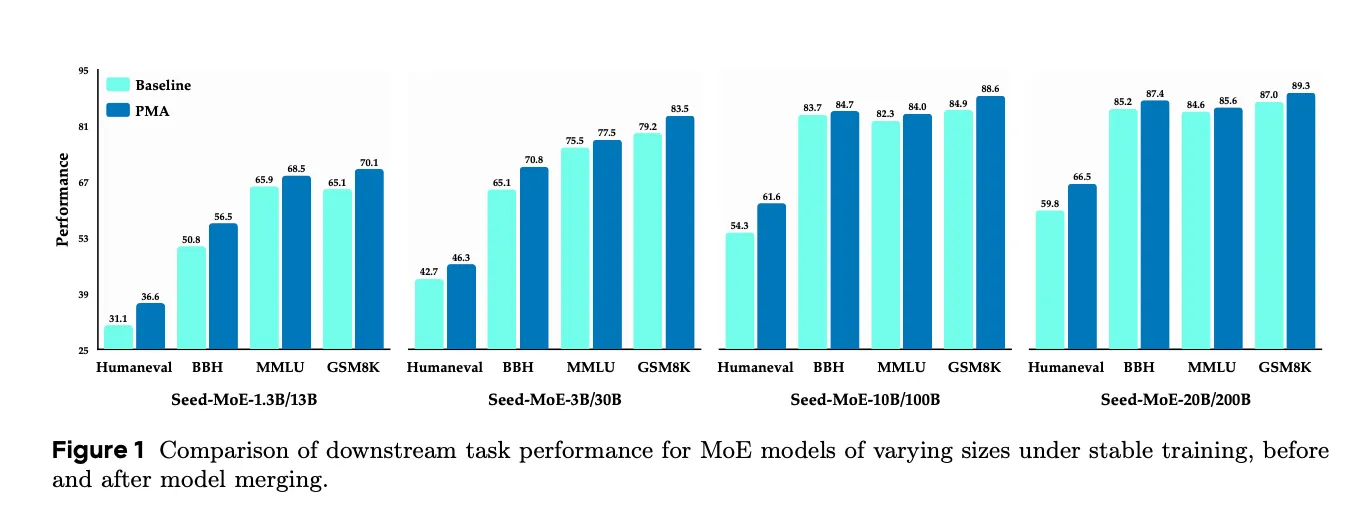

In this work, the authors introduce Pre-trained Model Average (PMA), a strategy for model-level weight merging during pre-training. Across a diverse set of LLMs of varying sizes and architectures, they found that merging checkpoints from the stable training phase yields consistent and significant performance improvements. They also found that initializing subsequent training, such as supervised fine-tuning, from a PMA-merged model produced a more stable GradNorm than the baseline, along with fewer loss spikes. This stabilization did not hurt final performance, and the method remained robust across various learning rates.
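To make checkpoint averaging concrete, here is a minimal sketch in Python. It assumes each checkpoint is a plain dictionary mapping parameter names to flat lists of floats (a stand-in for real framework tensors), and the names `average_checkpoints` and `ema_checkpoints` are hypothetical, not taken from the paper or from MergeKit. A uniform average corresponds to a simple moving average over the selected checkpoints; the exponential variant gives newer checkpoints more weight.

```python
from typing import Dict, List, Optional, Sequence

# Simplified stand-in for a model state dict: parameter name -> flat list of floats.
StateDict = Dict[str, List[float]]


def average_checkpoints(
    checkpoints: Sequence[StateDict], weights: Optional[Sequence[float]] = None
) -> StateDict:
    """Weighted average of checkpoints; uniform weights give a simple moving average."""
    if not checkpoints:
        raise ValueError("need at least one checkpoint")
    if weights is None:
        weights = [1.0] * len(checkpoints)
    if len(weights) != len(checkpoints) or sum(weights) <= 0:
        raise ValueError("need one weight per checkpoint with a positive sum")
    total = sum(weights)
    merged: StateDict = {}
    for name, first in checkpoints[0].items():
        # Average position i of this parameter across all checkpoints.
        merged[name] = [
            sum(w * ckpt[name][i] for w, ckpt in zip(weights, checkpoints)) / total
            for i in range(len(first))
        ]
    return merged


def ema_checkpoints(checkpoints: Sequence[StateDict], alpha: float = 0.5) -> StateDict:
    """Exponential moving average over checkpoints ordered oldest to newest.

    Each newer checkpoint gets weight `alpha`; the running average keeps `1 - alpha`.
    """
    if not checkpoints:
        raise ValueError("need at least one checkpoint")
    merged = {name: list(values) for name, values in checkpoints[0].items()}
    for ckpt in checkpoints[1:]:
        for name, values in ckpt.items():
            merged[name] = [alpha * v + (1.0 - alpha) * m for v, m in zip(values, merged[name])]
    return merged
```

For example, `average_checkpoints([{"w": [1.0, 2.0]}, {"w": [3.0, 4.0]}, {"w": [5.0, 6.0]}])` returns `{"w": [3.0, 4.0]}`. With real models, the same loop would run over every tensor in each checkpoint; the averaging arithmetic does not change.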

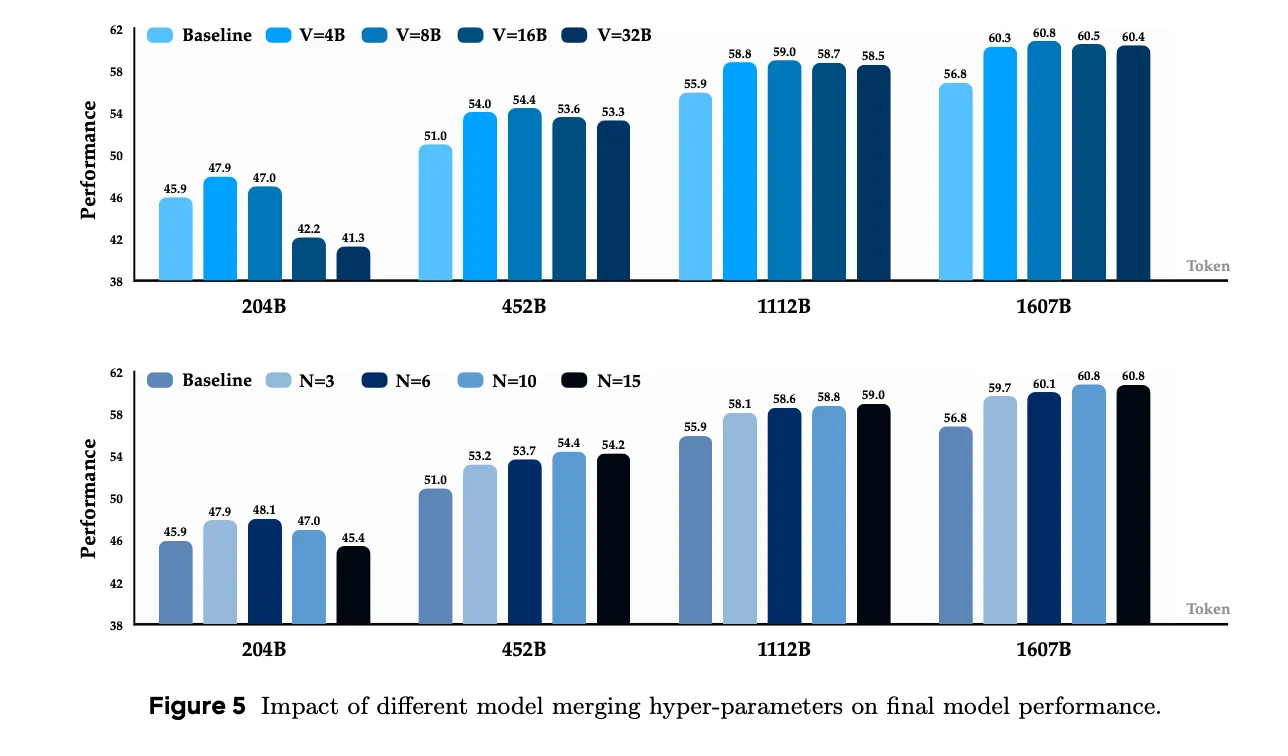

Additionally, the authors ran ablation studies on two merging choices: the interval between merged checkpoints and the number of checkpoints merged. As one might expect, large intervals resulted in lower performance, likely due to large weight disparities between checkpoints from early-stage and late-stage training, while merging more models consistently improved performance.
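The two knobs from the ablation, the spacing between merged checkpoints and how many are merged, can be expressed as a small selection step that runs before averaging. This is a hypothetical helper that assumes checkpoints are identified by training step; an interval could just as well be measured in tokens.

```python
from typing import Iterable, List


def select_checkpoints(saved_steps: Iterable[int], interval: int, num_models: int) -> List[int]:
    """Pick `num_models` checkpoints spaced `interval` steps apart, ending at the latest one."""
    available = set(saved_steps)
    if not available:
        raise ValueError("no checkpoints saved")
    if interval <= 0 or num_models <= 0:
        raise ValueError("interval and num_models must be positive")
    latest = max(available)
    chosen = [latest - k * interval for k in range(num_models)]
    missing = [step for step in chosen if step not in available]
    if missing:
        raise ValueError(f"no checkpoint saved at steps {missing}")
    # Oldest first, so the result can feed directly into an averaging routine.
    return sorted(chosen)
```

For instance, `select_checkpoints(range(0, 10_001, 500), interval=1000, num_models=4)` returns `[7000, 8000, 9000, 10000]`. Widening `interval` reaches further back into training, which, per the ablation, risks merging weights that differ too much.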

Finally, they found that pre-training with a constant learning rate combined with model merging can match the performance of models trained with learning rate decay, making training more efficient and providing a way to estimate final performance earlier in the process.

At Arcee, we leveraged this pre-training method in the development of AFM-4.5B, the first Arcee Foundation Model. To build on this technique with more advanced merging, MergeKit also offers Arcee Fusion, a selective merging method that focuses on the most significant differences between models rather than indiscriminately merging all parameters. Using importance scores for parameters, dynamic thresholding, and selective integration, it incorporates only the most significant changes into the base model, improving results compared to merges based on simple averaging.
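The general idea of selective merging can be illustrated with a deliberately simplified sketch. This is not MergeKit’s Arcee Fusion implementation: here the importance score is assumed to be the magnitude of each parameter’s change, and the dynamic threshold is assumed to be the cutoff that keeps the top `keep_fraction` of scores within each tensor, so it adapts to that tensor’s own distribution. `selective_merge` is a hypothetical name.

```python
from typing import List, Sequence


def selective_merge(
    base: Sequence[float], tuned: Sequence[float], keep_fraction: float = 0.25
) -> List[float]:
    """Apply only the most important parameter changes from `tuned` onto `base`.

    Simplifying assumptions (not MergeKit's Arcee Fusion):
    - importance score = absolute change |tuned - base|
    - threshold = score of the k-th most important parameter, k = keep_fraction * n,
      so the cutoff adapts to each tensor's own score distribution
    """
    if len(base) != len(tuned):
        raise ValueError("base and tuned must have the same shape")
    if not base:
        return []
    if not 0.0 < keep_fraction <= 1.0:
        raise ValueError("keep_fraction must be in (0, 1]")
    deltas = [t - b for b, t in zip(base, tuned)]
    scores = [abs(d) for d in deltas]
    k = max(1, round(keep_fraction * len(scores)))
    threshold = sorted(scores, reverse=True)[k - 1]
    # Parameters at or above the threshold take the tuned value (ties may keep a few extra);
    # everything else keeps the base value.
    return [b + d if s >= threshold else b for b, d, s in zip(base, deltas, scores)]
```

For example, `selective_merge([0.0, 0.0, 0.0, 0.0], [0.1, -0.9, 0.05, 0.4], keep_fraction=0.5)` returns `[0.0, -0.9, 0.0, 0.4]`: only the two largest changes are applied. A simple average would instead move every parameter halfway toward the tuned model.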

Key Takeaway: Model merging is useful not only in post-training. In pre-training, it can increase model performance, stabilize the training process, and help monitor a run by simulating model performance after annealing.

Healthcare as an industry frequently faces challenges related to data access and privacy, which makes developing domain-specific models through fine-tuning difficult and often costly. In the second paper, the authors present PatientDx, a model merging framework for designing domain-specific large language models (LLMs) for predictive health tasks without requiring fine-tuning or any adaptation on patient data.

Using MIMIC-IV, an open electronic health record dataset, they evaluated the implications of model merging across predictive tasks and the pitfalls of fine-tuning, such as data leakage. Additionally, they explored the use of merged models for downstream tasks, such as identifying relevant keywords for patient profiles to improve tagging and the identification of similar patients.

Given that patient data consists of demographic and clinical features (age, lab values, diagnoses, time series data) in diverse formats (ranges, values, strings), the authors made two key observations. First, an LLM performing predictive analysis on patient health record data needs a backbone model adapted to numerical reasoning, such as DART-Math. Second, merged models combining math and general-purpose LLMs outperform either model type alone on predictive tasks, suggesting that an optimal merged model exists for these tasks. The authors therefore optimized task performance by tuning the merge ratios between selected models, with no adaptation on patient data.
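Tuning a merge ratio amounts to a small search: merge at several candidate ratios, score each merged model, and keep the best. The sketch below assumes a linear merge of two same-architecture models, represented as dictionaries of float lists. `search_merge_ratio` is hypothetical, and `evaluate` stands in for whatever held-out task metric is used (for example, validation AUROC); which data the authors used to score candidates is not described in this article.

```python
from typing import Callable, Dict, Iterable, List, Optional, Tuple

Weights = Dict[str, List[float]]


def linear_merge(a: Weights, b: Weights, t: float) -> Weights:
    """Interpolate every parameter as (1 - t) * a + t * b."""
    return {name: [(1.0 - t) * x + t * y for x, y in zip(a[name], b[name])] for name in a}


def search_merge_ratio(
    a: Weights,
    b: Weights,
    evaluate: Callable[[Weights], float],
    ratios: Iterable[float],
) -> Tuple[float, float]:
    """Grid-search the merge ratio; return (best_ratio, best_score), higher is better."""
    best_ratio: Optional[float] = None
    best_score = float("-inf")
    for t in ratios:
        score = evaluate(linear_merge(a, b, t))
        if score > best_score:
            best_ratio, best_score = t, score
    if best_ratio is None:
        raise ValueError("no candidate ratios were evaluated")
    return best_ratio, best_score
```

As a toy check, with `a = {"w": [0.0]}`, `b = {"w": [1.0]}`, a made-up metric `lambda w: -abs(w["w"][0] - 0.3)`, and ratios `[0.0, 0.25, 0.5, 0.75, 1.0]`, the search returns a ratio of 0.25. In practice each evaluation is a full benchmark run, so coarse grids are common.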

Using the model soup and spherical linear interpolation (SLerp) merge methods, the authors examined whether merged models are more effective than input models for mortality prediction. Specifically, they merged Mistral and Llama models with various combinations of biology, instruct, and math fine-tuned LLMs. The result? Their 8B merged models showed a 7% improvement in AUROC compared to the initial models. They also found that the risk of data leakage was reduced in PatientDx and similar models that were not fine-tuned. Merging did not have a significant impact on information retrieval in downstream tasks, but it is an area that warrants further exploration.
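For reference, the two merge methods named above can be sketched on flat weight vectors. A model soup here is a uniform average of the models’ weights, and SLERP interpolates along the arc between two weight vectors rather than the straight line between them: the angle is computed from the normalized vectors, and the resulting interpolation weights are applied to the original vectors. This is a minimal illustration assuming same-shape parameters, not the authors’ code.

```python
import math
from typing import List, Sequence


def model_soup(models: Sequence[Sequence[float]]) -> List[float]:
    """Uniform model soup: the element-wise mean of same-shaped weight vectors."""
    if not models:
        raise ValueError("need at least one model")
    return [sum(values) / len(models) for values in zip(*models)]


def slerp(a: Sequence[float], b: Sequence[float], t: float, eps: float = 1e-8) -> List[float]:
    """Spherical linear interpolation between weight vectors a (t=0) and b (t=1)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a < eps or norm_b < eps:
        # The angle is undefined for a zero vector; fall back to linear interpolation.
        return [(1.0 - t) * x + t * y for x, y in zip(a, b)]
    # Angle between the normalized vectors, clamped to guard against rounding error.
    cos_omega = sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)
    omega = math.acos(max(-1.0, min(1.0, cos_omega)))
    sin_omega = math.sin(omega)
    if sin_omega < eps:
        # Nearly parallel or anti-parallel vectors: linear interpolation is the stable choice.
        return [(1.0 - t) * x + t * y for x, y in zip(a, b)]
    w_a = math.sin((1.0 - t) * omega) / sin_omega
    w_b = math.sin(t * omega) / sin_omega
    return [w_a * x + w_b * y for x, y in zip(a, b)]
```

For two orthogonal unit vectors, `slerp([1.0, 0.0], [0.0, 1.0], 0.5)` gives components of about 0.7071, so the result keeps norm 1, whereas a plain average gives `[0.5, 0.5]` with a norm of about 0.7071. Preserving the magnitude of the weights is the usual motivation for choosing SLERP over linear interpolation.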

This work represents a validation of what the open-source community has long known and experimented with: the power of merging as a means to develop powerful, domain-specific models, particularly with limited training data.

Key Takeaway: Model merging can be a powerful approach for developing domain-specific models in healthcare, eliminating the need to train on patient data. By tuning the merge ratio of pre-trained models carefully selected for the task at hand, you can achieve strong task-specific performance while minimizing privacy risks and data acquisition costs.

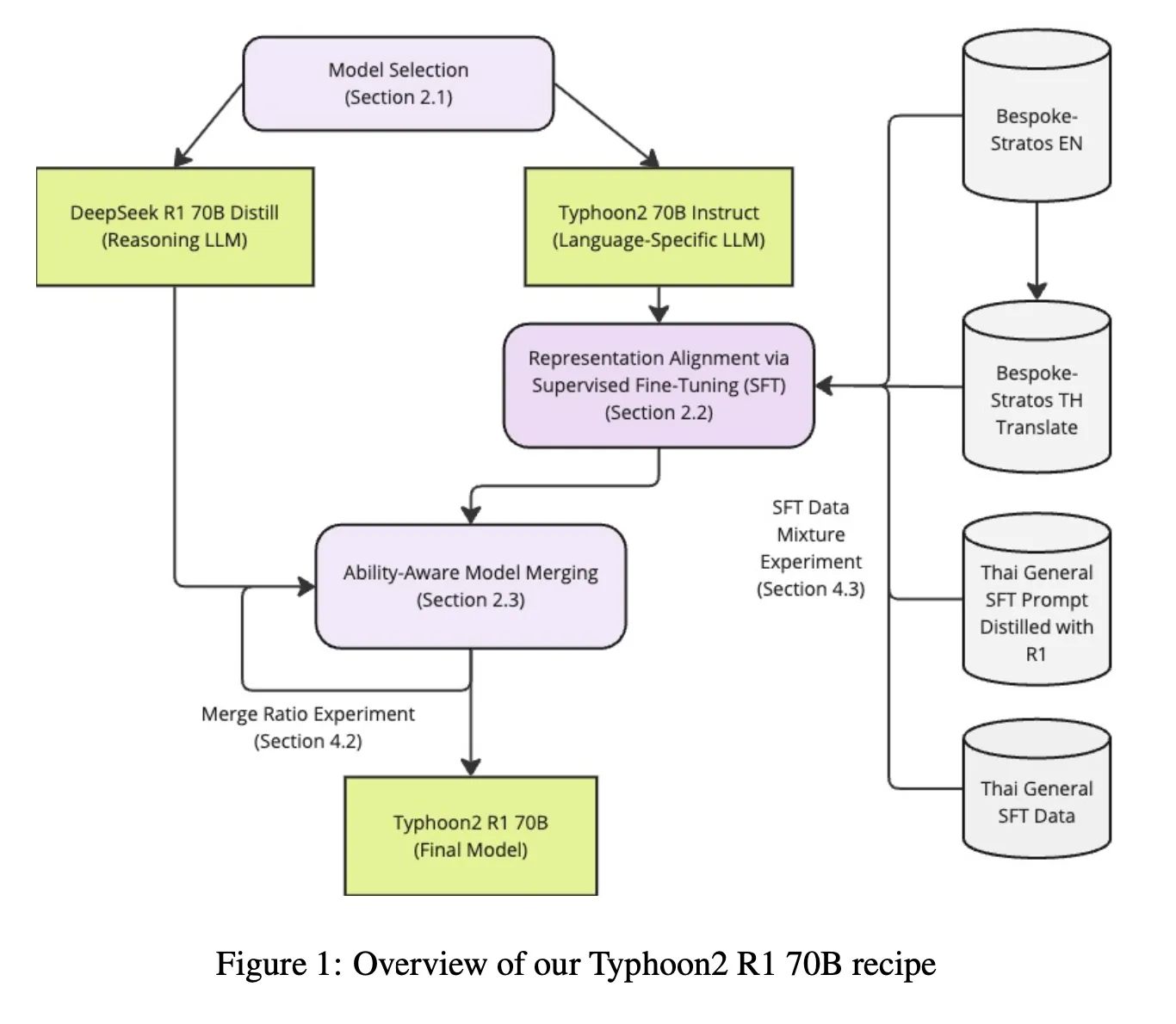

Despite recent advancements in LLMs, particularly in reasoning ability and performance on more complex tasks, low-resource languages remain underserved. Although models like Llama and Qwen perform strongly on low-resource language benchmarks, the authors of the third paper note that in practice these models frequently have issues with character usage and code switching. Continued pre-training and post-training can address this gap; however, these data-centric approaches face challenges with the quality and quantity of training data needed to distill language-specific reasoning into models. The authors propose an open recipe for enhancing the reasoning capabilities of language-specific LLMs for low-resource languages through model merging, publicly available datasets, and a modest computational budget.

The merging recipe combined DeepSeek R1 70B with Typhoon2 70B Instruct, a Thai language-specific LLM. The merge ratio was optimized to enhance the reasoning capability of the language-specific model while maintaining strong performance in the target language. Their two-phase approach consisted of fine-tuning the Thai model on publicly available data and then merging it with the reasoning model.

To assess reasoning capabilities, the merged model was benchmarked on AIME 2024, MATH-500, and LiveCodeBench. Language-specific performance was evaluated using IFEval, MT-Bench, and Language accuracy, along with Think accuracy to evaluate reasoning traces.

Models were merged using the DARE-linear method, with the merge ratio between the fine-tuned Thai model and the reasoning model tuned experimentally. The authors found that gradually reducing the reasoning model’s ratio in the later layers improved performance on language-specific tasks while preserving the model’s reasoning abilities. They also explored how the data mixture used to fine-tune the language model affects overall performance, including the addition of reasoning traces, the proportion of Thai data, and the inclusion of a general instruction dataset.
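DARE (Drop And REscale) sparsifies each model’s task vector, its difference from a shared base, by randomly dropping entries and rescaling the survivors by `1 / (1 - drop_rate)` so the expected update is unchanged; the linear variant then adds a weighted sum of these sparsified deltas to the base. The sketch below assumes all models share one base model and represents weights as flat float lists. The function names, the `drop_rate` default, and the seed handling are illustrative assumptions, not the authors’ settings.

```python
import random
from typing import List, Sequence


def dare(delta: Sequence[float], drop_rate: float, rng: random.Random) -> List[float]:
    """Drop each entry with probability `drop_rate`; rescale survivors by 1 / (1 - drop_rate)."""
    if not 0.0 <= drop_rate < 1.0:
        raise ValueError("drop_rate must be in [0, 1)")
    scale = 1.0 / (1.0 - drop_rate)
    return [0.0 if rng.random() < drop_rate else d * scale for d in delta]


def dare_linear(
    base: Sequence[float],
    models: Sequence[Sequence[float]],
    weights: Sequence[float],
    drop_rate: float = 0.5,
    seed: int = 0,
) -> List[float]:
    """Base plus a weighted sum of DARE-sparsified task vectors (model - base)."""
    if len(models) != len(weights):
        raise ValueError("need one weight per model")
    rng = random.Random(seed)
    merged = list(base)
    for model, weight in zip(models, weights):
        # Task vector: what fine-tuning changed relative to the shared base.
        delta = [m - b for m, b in zip(model, base)]
        for i, d in enumerate(dare(delta, drop_rate, rng)):
            merged[i] += weight * d
    return merged
```

With `drop_rate=0.0`, this reduces to a plain weighted task-vector merge, which makes a handy sanity check: `dare_linear([0.0, 0.0], [[1.0, 2.0], [3.0, -1.0]], [0.5, 0.5], drop_rate=0.0)` returns `[2.0, 0.5]`.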

Their final model achieved performance within 4% of the language-specific model, with a 12.8% boost across all tasks compared to the reasoning model.

At Arcee, we have conducted extensive work on multilingual adaptation, utilizing advanced model merging and post-training techniques, including ability-aware merging. In fact, we leveraged ability-aware merging in the development of a reasoning model for Madeline & Co., an end-to-end AI-powered strategy, design, and innovation platform helping users navigate complex decisions with clarity and confidence. Read more about how we trained their model here.

Key Takeaway: Ability-aware merging can be a powerful approach for combining reasoning and language-specific models, letting each model contribute its strengths at the appropriate layers. Coupled with fine-tuning, it can enhance the reasoning abilities of LLMs for low-resource languages using only publicly available data and model merging.
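The layer-wise idea described for the Thai recipe, keeping the reasoning model’s share higher in earlier layers and gradually lowering it in later ones, can be sketched as a per-layer ratio schedule. The plateau-then-taper shape, the `start`/`end` values, and the function names are assumptions for illustration; for clarity, the per-layer merge here is a plain linear blend, whereas the authors combined their schedule with DARE-linear.

```python
from typing import List, Sequence


def layerwise_ratios(num_layers: int, start: float, end: float, taper_from: float = 0.5) -> List[float]:
    """Reasoning-model weight per layer: flat at `start`, then a linear taper to `end`.

    `taper_from` is the fractional depth (0 = first layer, 1 = last) where the taper begins.
    """
    if num_layers < 1:
        raise ValueError("num_layers must be positive")
    if not 0.0 <= taper_from < 1.0:
        raise ValueError("taper_from must be in [0, 1)")
    if num_layers == 1:
        return [start]
    ratios = []
    for layer in range(num_layers):
        depth = layer / (num_layers - 1)
        if depth <= taper_from:
            ratios.append(start)
        else:
            progress = (depth - taper_from) / (1.0 - taper_from)
            ratios.append(start + (end - start) * progress)
    return ratios


def merge_by_layer(
    reasoning: Sequence[Sequence[float]],
    language: Sequence[Sequence[float]],
    ratios: Sequence[float],
) -> List[List[float]]:
    """Blend each layer as ratio * reasoning + (1 - ratio) * language."""
    if not (len(reasoning) == len(language) == len(ratios)):
        raise ValueError("need matching layer counts and one ratio per layer")
    return [
        [r * x + (1.0 - r) * y for x, y in zip(r_layer, l_layer)]
        for r_layer, l_layer, r in zip(reasoning, language, ratios)
    ]
```

With five layers, `layerwise_ratios(5, start=0.8, end=0.2)` keeps the first three layers at 0.8 and tapers to about 0.5 and 0.2 in the last two, so the language-specific model dominates the final layers.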

The papers highlighted here confirm what the open-source community and Arcee have known and practiced for years: model merging is one of the best and most cost-effective ways to develop better models.

Ready to explore the power of MergeKit in your business? Get in touch with us to learn more about how our team can help you merge and deploy models for your specific use case.